Apple’s CSAM detection is scheduled to launch later this year with an iOS 15 update. But a developer claims to have extracted the feature’s algorithm from iOS 14.3. He rebuilt a working model of the algorithm and posted it to GitHub so that others could test the system’s scanning capability.

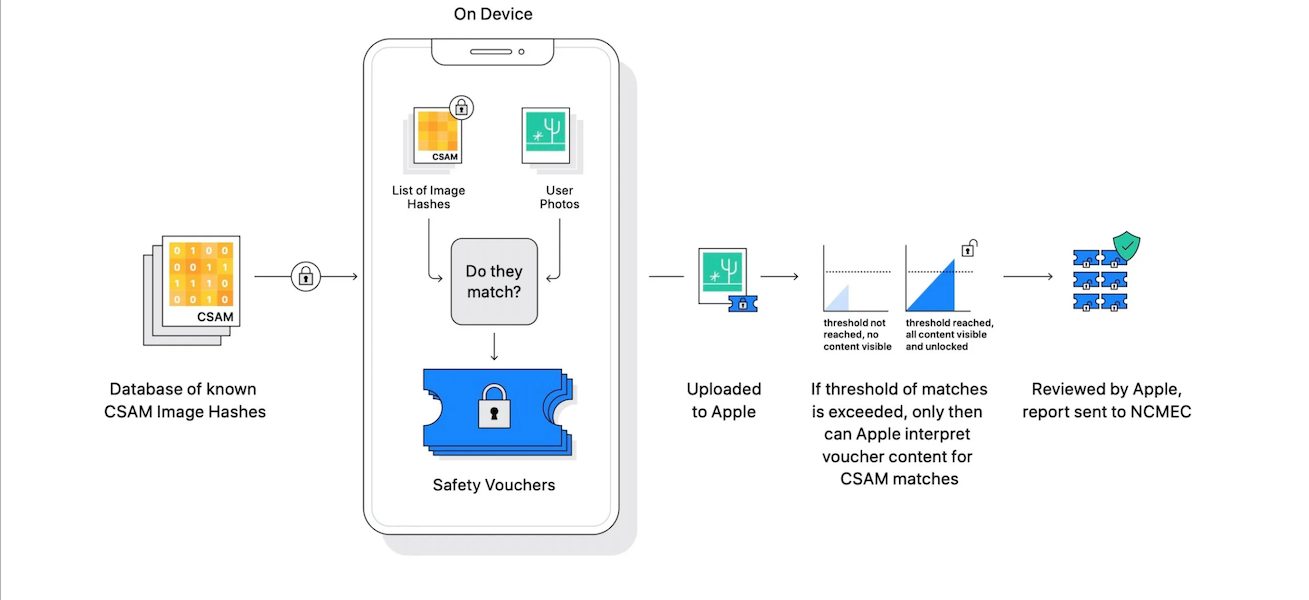

The Cupertino tech giant’s upcoming CSAM detection feature will use a NeuralHash system to match users’ photos on iCloud against known CSAM. The company’s head of software, Craig Federighi, explained that the system is trained to look for the exact fingerprints in the CSAM database provided by NCMEC and nothing else. Because part of the analysis is done on the device as pictures are transferred to iCloud and the rest is done in the cloud, it might be difficult to deliberately generate false positives.

Developer creates a working model of Apple’s CSAM detection algorithm to test the scanning system

Asuhariet Ygvar found the algorithm in hidden APIs, with the NeuralHash model appearing under the name MobileNetV3. On Reddit, he said he believes it is the correct algorithm because its prefix and behavior match the description given in Apple’s documentation.

First of all, the model files have prefix NeuralHashv3b-, which is the same term as in Apple’s document.

Secondly, in this document Apple described the algorithm details in Technology Overview -> NeuralHash section, which is exactly the same as what I discovered. For example, in Apple’s document: “The descriptor is passed through a hashing scheme to convert the N floating-point numbers to M bits. Here, M is much smaller than the number of bits needed to represent the N floating-point numbers.” And as you can see from here and here N=128 and M=96.

Moreover, the hash generated by this script almost doesn’t change if you resize or compress the image, which is again the same as described in Apple’s document.
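The step Ygvar quotes, turning N floating-point numbers into M bits, can be illustrated with a small sketch. This is not Apple's code: it assumes a sign-of-projection scheme, and the projection matrix here is a randomly generated stand-in. The names `make_projection`, `descriptor_to_bits` and `bits_to_hex` are hypothetical; only N=128 and M=96 come from the post.

```python
import random

N = 128  # descriptor length quoted in the Reddit post
M = 96   # hash length in bits quoted in the Reddit post


def make_projection(seed: int = 0) -> list[list[float]]:
    """Build a hypothetical M x N matrix of Gaussian weights (a stand-in, not Apple's)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(N)] for _ in range(M)]


def descriptor_to_bits(descriptor: list[float], projection: list[list[float]]) -> str:
    """Project the descriptor onto M directions and keep one sign bit per direction."""
    if len(descriptor) != N:
        raise ValueError(f"expected {N} floats, got {len(descriptor)}")
    bits = []
    for row in projection:
        dot = sum(w * x for w, x in zip(row, descriptor))
        bits.append("1" if dot >= 0 else "0")
    return "".join(bits)


def bits_to_hex(bits: str) -> str:
    """Pack the bit string into hex digits (96 bits become 24 hex digits)."""
    return f"{int(bits, 2):0{len(bits) // 4}x}"
```

Because only the sign of each projection is kept, small changes to the descriptor tend to leave most bits unchanged, which is in line with the resize and compression behavior described in the quote.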

Ygvar found that the system cannot be fooled by compressing or resizing images, but that it fails to detect rotated or cropped images. To explain why some results differed slightly, he wrote:

It’s because neural networks are based on floating-point calculations. The accuracy is highly dependent on the hardware. For smaller networks it won’t make any difference. But NeuralHash has 200+ layers, resulting in significant cumulative errors. In practice it’s highly likely that Apple will implement the hash comparison with a few bits tolerance.
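To make the idea of "a few bits tolerance" concrete, here is a minimal sketch of comparing two hex-encoded hashes by counting the bits in which they differ. It reflects Ygvar's speculation, not anything Apple has confirmed; the function names and the default tolerance of 3 bits are assumptions chosen purely for illustration.

```python
def hamming_distance(hash_a: str, hash_b: str) -> int:
    """Count differing bits between two equal-length hex-encoded hashes."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    # XOR leaves a 1 in every bit position where the two hashes disagree.
    return bin(int(hash_a, 16) ^ int(hash_b, 16)).count("1")


def matches(hash_a: str, hash_b: str, tolerance_bits: int = 3) -> bool:
    """Treat two hashes as a match if they differ in at most tolerance_bits bits.

    tolerance_bits is a hypothetical value, not a figure from Apple.
    """
    return hamming_distance(hash_a, hash_b) <= tolerance_bits
```

With this kind of comparison, two hashes of the same image computed on different hardware would still match even if floating-point drift flipped one or two bits.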

Based on Ygvar’s findings, another user was able to create a collision, in which two different images produced the same hash. The discussion that followed focused on generating images that fool NeuralHash into producing false positives. Some believed that they could manipulate certain images to match CSAM fingerprints and send them to a user via a messaging app. But others were quick to point out that the scanning system is activated only when an image is uploaded to iCloud, and that an account must have at least 30 CSAM images to trigger a human review. So it is unlikely that a user would upload random pictures received from others to their iCloud, which is what a false positive attack would require.

[Update]: Apple told Motherboard that the discovered NeuralHash algorithm is not its final version.

Apple, however, told Motherboard in an email that the version analyzed by users on GitHub is a generic version, and not the final version that will be used for iCloud Photos CSAM detection. Apple said that it also made the algorithm public.
