After widespread backlash and criticism, Apple has finally decided to delay the launch of its new child protection features, which included Communication Safety in Messages, CSAM detection, and expanded guidance in Siri and Search. The controversial features were scheduled to roll out to users in the United States with the iOS 15 update later this year.

Although the features were designed to protect young children from predators and to prevent the spread of child pornography, the mechanics of the CSAM detection feature in particular raised privacy and safety concerns among users.

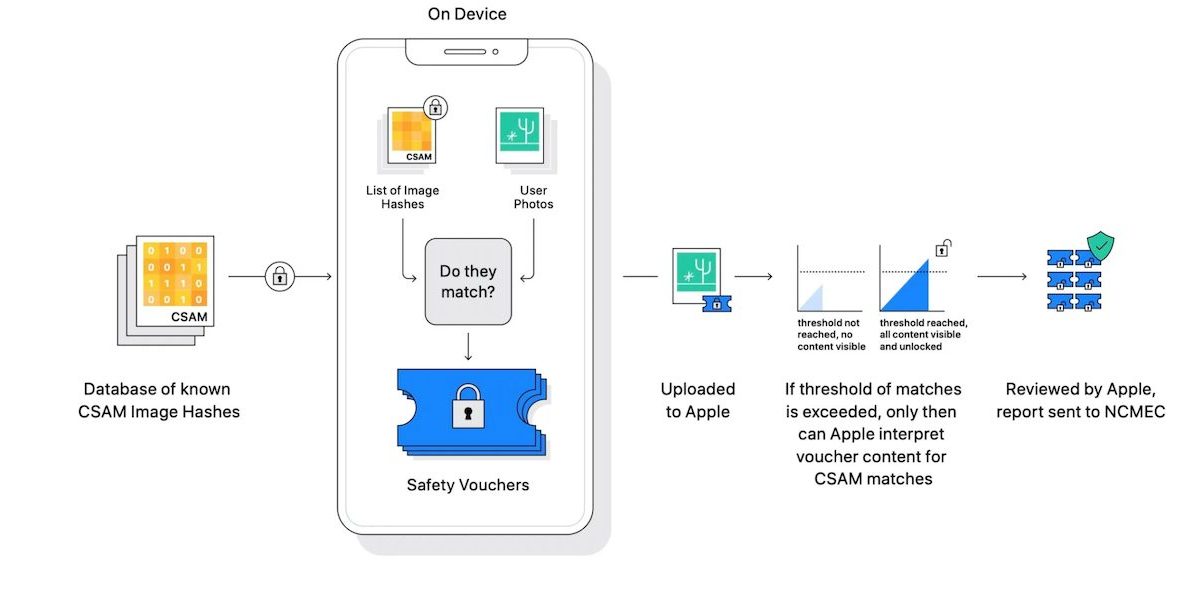

The CSAM detection feature would use a system called NeuralHash to detect known CSAM in two steps. First, before an image is uploaded to iCloud Photos, the user's device would compute the image's fingerprint and compare it against a database of known CSAM hashes provided by NCMEC. The result of this match would be stored in an encrypted safety voucher that is uploaded to iCloud along with the image. Second, if the number of matching vouchers on an account exceeds a threshold of about 30 images, the account would become eligible for human review and, if merited, further punitive action.
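To make the two-step flow easier to picture, here is a deliberately simplified Python sketch. It is not Apple's implementation: the real system used a perceptual NeuralHash and cryptography designed so that neither the device nor Apple could read match results below the threshold, whereas this sketch uses a plain SHA-256 hash as a stand-in and stores match results in the clear. The names `fingerprint`, `SafetyVoucher`, `make_voucher`, `account_needs_review` and `MATCH_THRESHOLD` are hypothetical, chosen only for illustration.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical threshold, following the "about 30 images" figure above.
MATCH_THRESHOLD = 30


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint: an exact SHA-256 hash, NOT a perceptual hash
    like NeuralHash, so any re-encoding of the image changes it."""
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass(frozen=True)
class SafetyVoucher:
    """Hypothetical voucher. In Apple's design the match result was
    encrypted; here it is a plain boolean for readability."""
    image_id: str
    is_match: bool


def make_voucher(image_id: str, image_bytes: bytes, known_hashes: set[str]) -> SafetyVoucher:
    # Step 1 (on device, before upload): compare the image's fingerprint
    # with the database of known hashes.
    return SafetyVoucher(image_id, fingerprint(image_bytes) in known_hashes)


def account_needs_review(vouchers: list[SafetyVoucher], threshold: int = MATCH_THRESHOLD) -> bool:
    # Step 2 (server side): the account becomes eligible for human review
    # only when its number of matches exceeds the threshold.
    matches = sum(1 for v in vouchers if v.is_match)
    return matches > threshold
```

With this sketch, an account holding 30 matching vouchers would not be flagged, while a 31st match would make it eligible for review. The exact-hash stand-in also shows why Apple used a perceptual hash instead: a cryptographic hash only matches byte-identical files.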

Cryptography and digital privacy experts immediately labeled the CSAM detection system a surveillance tool that governments could exploit to suppress activists, rival politicians, journalists, and dissidents.

Apple seeks more time to improve the new child protection features before rolling them out

Apple’s announcement message reads:

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

Until this announcement, Apple had given no hint of a delay and had instead released additional documents explaining how CSAM detection and the other features would work and be implemented. However, the company's Senior Vice President of Software Engineering, Craig Federighi, acknowledged that Apple should have put more thought into how the features were announced, because the announcement created a negative sound bite. He still maintained that the new scanning system cannot be characterized as a "backdoor."
