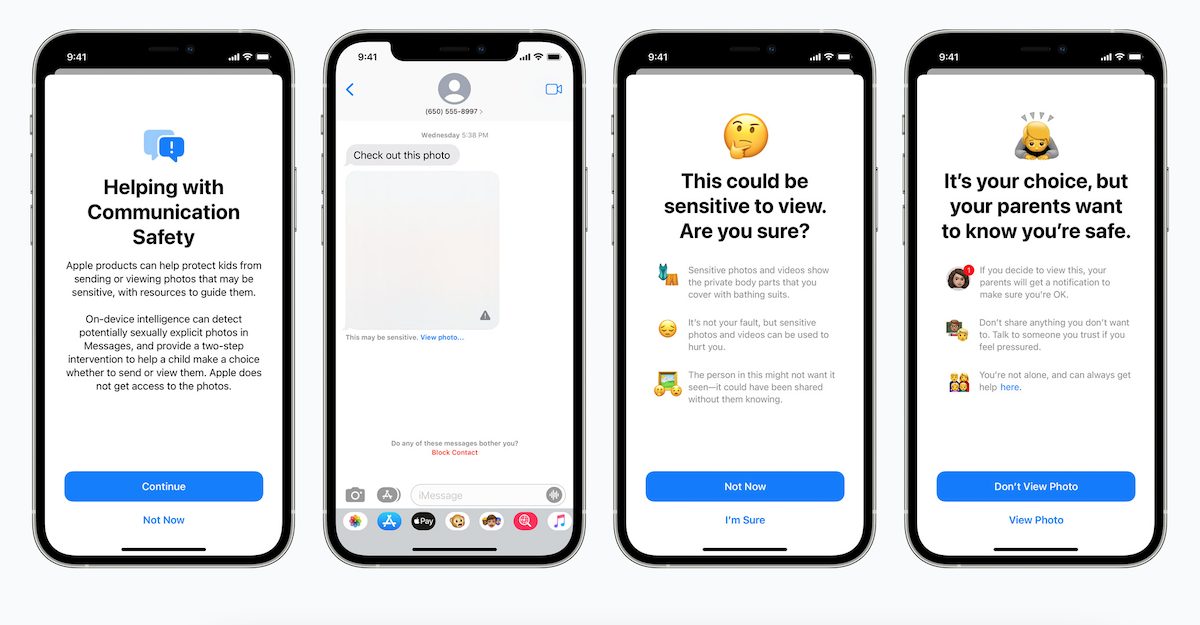

Since Apple announced plans to detect child sexual abuse material (CSAM) in iCloud Photos using image hashing, alongside a separate feature that scans Messages for sexually explicit images sent to or from children, it has faced criticism from technologists, academics, and policy advocates. Most recently, two Princeton University researchers argued that the Cupertino tech giant's system is dangerous. They base this on their own experience: they spent years developing a similar system.

Princeton researchers urge Apple to abandon its CSAM plan

In an editorial for The Washington Post, Jonathan Mayer, assistant professor of computer science and public affairs at Princeton University, and Anunay Kulshrestha, a researcher at the Princeton University Center for Information Technology Policy, discussed their experience building CSAM detection technology.

Two years ago, the researchers began a project to identify CSAM in end-to-end encrypted online services. They say they understand the "value of end-to-end encryption, which protects data from third-party access," but they are also concerned about CSAM "spreading on encrypted platforms."

Mayer and Kulshrestha set out to build a CSAM detection system that online services could use without breaking end-to-end encryption. Although experts in the field doubted such a system was feasible, the two researchers did build a working prototype, but they ran into a number of problems along the way.

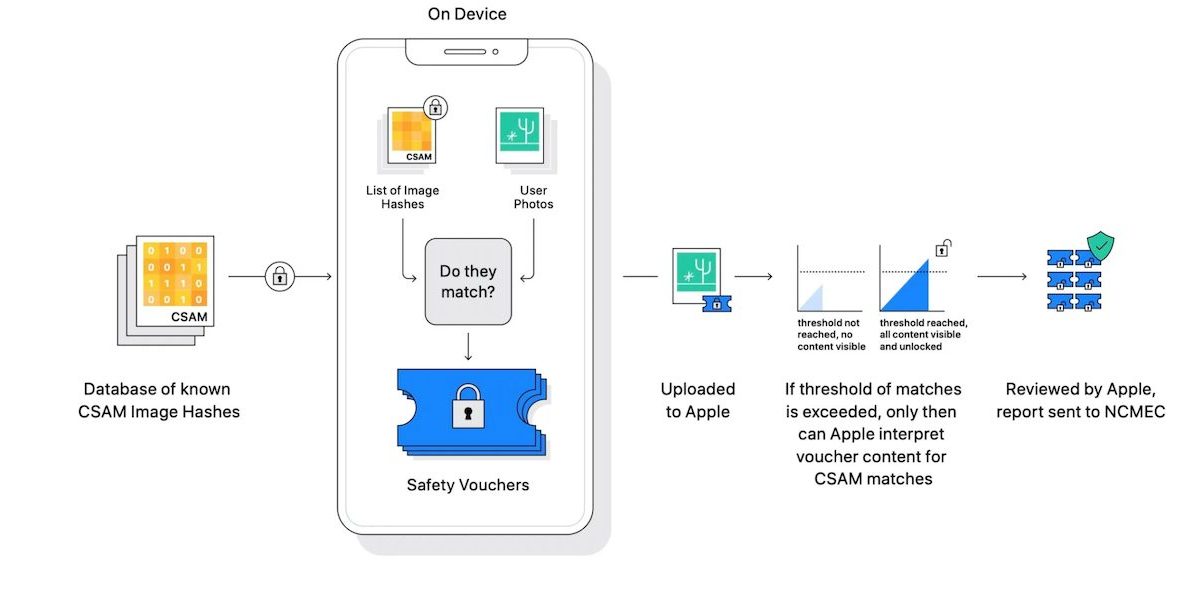

> We tried to explore a possible middle ground, where online services could identify harmful content while preserving end-to-end encryption. The concept was straightforward: If anyone shared material that matched a database of known harmful content, the service would be notified. If a person shared innocent content, the service would learn nothing. People could not read the database or find out whether content matched, as that information could reveal law enforcement methods and help criminals evade detection.

> Knowledgeable observers claimed that a system like ours was far from feasible. After many false starts, we built a working prototype. But we encountered a problem.

Concerns that CSAM detection could be repurposed for surveillance and censorship are widespread. Mayer and Kulshrestha are particularly worried that governments could use the technology to detect content other than CSAM. This possibility left both researchers "disturbed," and they are now warning Apple about the shortcomings they discovered.

> A foreign government could, for example, force a service to identify people who share disfavored political speech. This is not hypothetical: WeChat, the popular Chinese messaging app, already uses content matching to identify dissident material. India adopted rules this year that may require pre-screening of content critical of government policy. Russia recently fined Google, Facebook and Twitter for failing to remove pro-democracy material.

> We discovered other shortcomings. The content-matching process can produce false positives, and malicious users can game the system to subject innocent users to scrutiny.

The editorial comes shortly after more than 90 civil rights and policy groups signed an open letter asking Apple to abandon its CSAM plan, warning that it will be used "to censor protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children."
