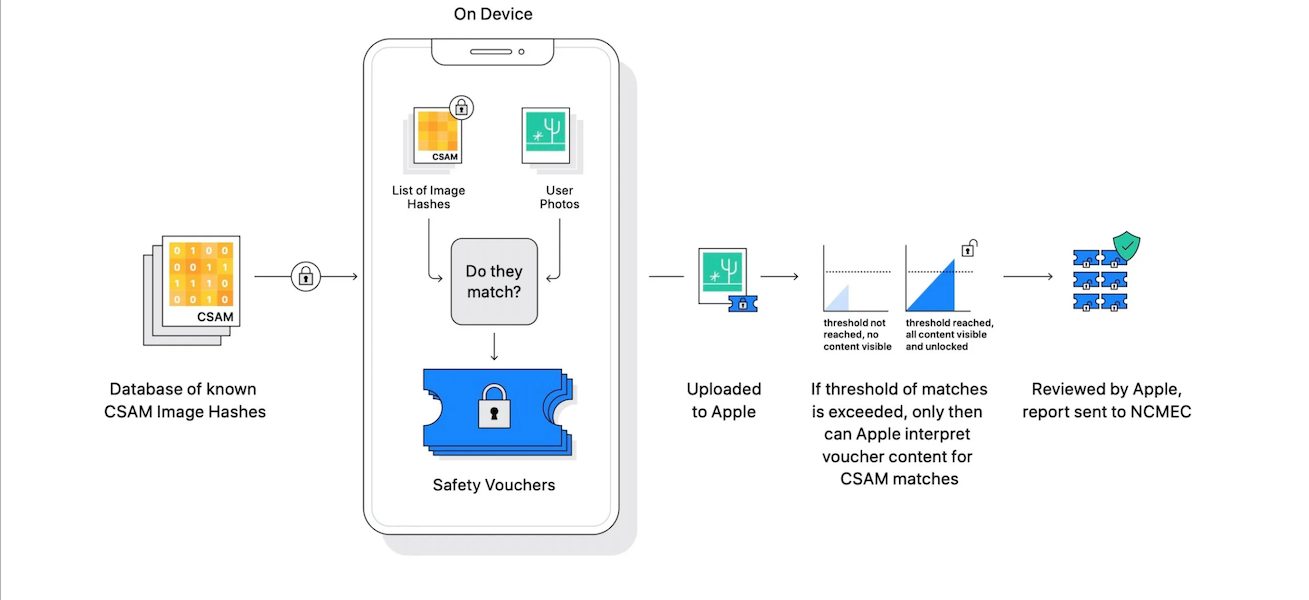

Since Apple announced its new child safety features, the company has faced significant backlash from digital security and privacy experts. Now Apple’s own employees are also raising concerns that repressive governments could exploit the “Expanded Protections for Children,” especially the hashing system used to detect CSAM.

Due to arrive in the fall with software updates across devices, the CSAM detection feature will use an on-device hashing system to scan users’ iCloud Photos for CSAM in order to prevent its spread.

Apple employees are worried that the CSAM detecting system will lead to censorship or arrests

In 2016, the Cupertino tech giant built a reputation by standing up to the FBI and denying its request to create a backdoor into what was proclaimed the most secure smartphone. Reuters now reports that Apple employees are mainly concerned about the new photo-scanning feature and are “worried that Apple is damaging its leading reputation for protecting privacy.”

Workers, who asked to remain anonymous, say that more than 800 messages “flooded” the company’s internal Slack channel, expressing worries that the CSAM scanning system “could be exploited by repressive governments looking to find other material for censorship or arrests.”

However, core security employees have not participated in the discussion, and some believe that “Apple’s solution was a reasonable response to pressure to crack down on illegal material.”

Although the company has given assurances that it will not allow any government to exploit the CSAM hashing system, critics fear that:

Any country’s legislature or courts could demand that any one of those elements be expanded, and some of those nations, such as China, represent enormous and hard-to-refuse markets.

Police and other agencies will cite recent laws requiring “technical assistance” in investigating crimes, including in the United Kingdom and Australia, to press Apple to expand this new capability, the EFF said.

A U.K. tech lawyer at decoded.legal said:

“Lawmakers will build on it as well. If Apple demonstrates that, even in just one market, it can carry out on-device content filtering, I would expect regulators/lawmakers to consider it appropriate to demand its use in their own markets, and potentially for an expanded scope of things.”
