Apple has introduced three new child safety features designed to protect children from predators and to limit the spread of Child Sexual Abuse Material (CSAM). The features were developed in collaboration with child safety experts. Parents will be able to help their children navigate online communication, on-device machine learning will scan images in the Messages app for sensitive content, and updated Siri and Search will give parents and children information and help if they encounter unsafe situations.

Apple to introduce measures to ensure children’s safety online and deter the spread of CSAM

The new child safety features will arrive this fall in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. Here is how each feature will work.

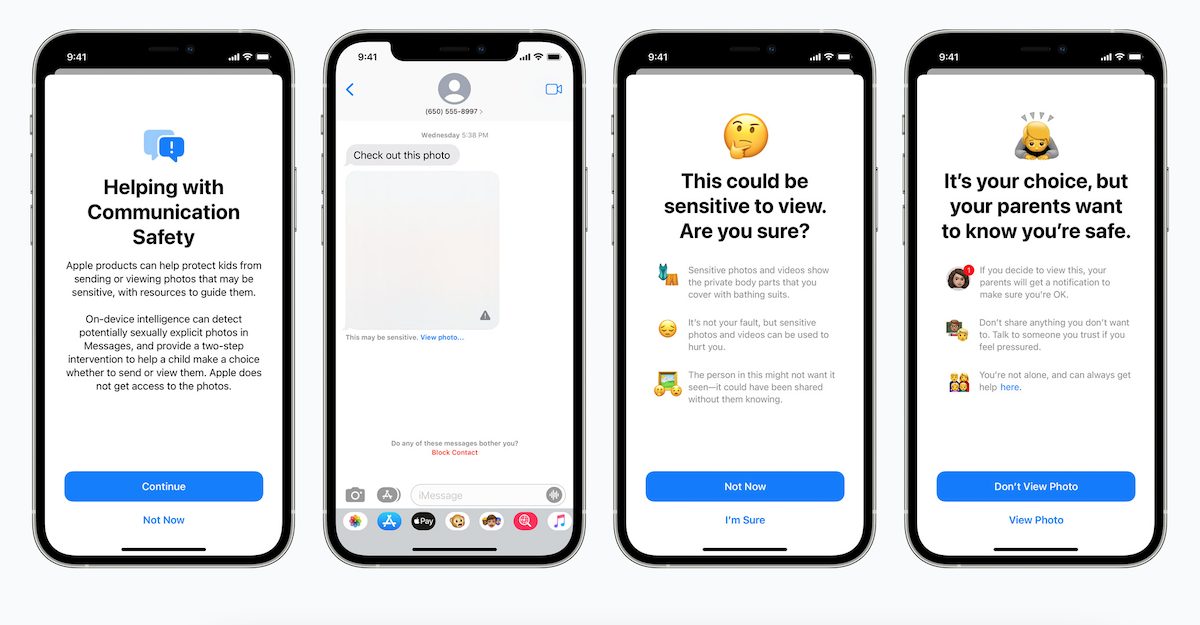

Safe communication in Messages

New tools in the Messages app will warn children and alert their parents. On-device machine learning will analyze sent and received images in Messages to determine whether the shared media is sexually explicit.

If a sexually explicit image is received or sent, the photo will be blurred and the child will be warned, presented with helpful resources, and “reassured” that it is okay not to view the photo. The child will also be told that their parents will receive a message if they view or send a sexually explicit photo.

Detecting CSAM to contain its spread

Child Sexual Abuse Material (CSAM) refers to any content depicting a child in sexually explicit activities. To limit the spread of CSAM, Apple will introduce new technology in iOS and iPadOS to detect known CSAM images stored in iCloud Photos.

To detect CSAM images, Apple’s new system will perform on-device matching against a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations. The database will be stored on users’ devices as an unreadable set of hashes.
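To make the idea of matching against a hash database concrete, here is a minimal Python sketch. It assumes a simple "average hash" as a stand-in for Apple's perceptual hash (NeuralHash), and the names `average_hash` and `matches_known_database` are hypothetical. Unlike the system described above, this toy keeps the known hashes in a readable set and performs no blinding or cryptography.

```python
from typing import List, Set

# A toy grayscale "image": rows of pixel brightness values from 0 to 255.
Image = List[List[int]]


def average_hash(image: Image) -> int:
    """Toy perceptual hash (aHash): one bit per pixel, set to 1 when the pixel
    is brighter than the image's mean. A stand-in for NeuralHash, not a copy of it."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def matches_known_database(image: Image, known_hashes: Set[int]) -> bool:
    """Return True if the image's hash appears in the set of known hashes."""
    return average_hash(image) in known_hashes


known = {average_hash([[10, 200], [30, 220]])}
print(matches_known_database([[30, 220], [50, 240]], known))  # True: a uniformly brightened copy
print(matches_known_database([[200, 10], [220, 30]], known))  # False: a different image
```

A perceptual hash is used rather than a cryptographic one so that small, uniform edits such as brightening still produce the same hash, while a genuinely different image does not.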

The on-device matching will be performed before an image is stored in iCloud Photos. A cryptographic technique called private set intersection will determine whether there is a match with the known CSAM hashes “without revealing the result,” and the device will then create a cryptographic safety voucher that is uploaded to iCloud Photos along with the image.
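Private set intersection lets two parties find the items they have in common without exposing the rest. The Python sketch below shows the textbook Diffie-Hellman-style variant purely to illustrate the general technique; it is not Apple's protocol. In particular, in this toy the client learns which of its items match, whereas the system described above is designed so that the match result is not revealed. The group, key generation, and function names (`hash_to_group`, `secret_exponent`, `psi_demo`) are assumptions chosen for readability, and the code is not secure for real use.

```python
import hashlib
import math
import secrets
from typing import List

# Toy group: nonzero integers modulo the Mersenne prime 2**127 - 1.
# Real protocols use carefully chosen elliptic-curve groups; this is NOT secure.
P = 2**127 - 1


def hash_to_group(item: bytes) -> int:
    """Map an item to a nonzero residue mod P using SHA-256."""
    return int.from_bytes(hashlib.sha256(item).digest(), "big") % (P - 1) + 1


def secret_exponent() -> int:
    """Pick a random exponent coprime to P - 1, so x -> x**k mod P is a bijection."""
    while True:
        k = secrets.randbelow(P - 2) + 1
        if math.gcd(k, P - 1) == 1:
            return k


def psi_demo(client_items: List[bytes], server_items: List[bytes]) -> List[bytes]:
    a = secret_exponent()  # known only to the client
    b = secret_exponent()  # known only to the server
    # Client -> server: H(c)^a for each client item; the exponent a blinds the values.
    client_blinded = [pow(hash_to_group(c), a, P) for c in client_items]
    # Server -> client: (H(c)^a)^b in the same order, plus H(s)^b for its own items.
    client_double = [pow(v, b, P) for v in client_blinded]
    server_blinded = [pow(hash_to_group(s), b, P) for s in server_items]
    # Client: (H(s)^b)^a. Exponents commute, so H(x)^(ab) values agree exactly when items agree.
    server_double = {pow(v, a, P) for v in server_blinded}
    return [c for c, d in zip(client_items, client_double) if d in server_double]


print(psi_demo([b"cat.jpg", b"dog.jpg"], [b"dog.jpg", b"bird.jpg"]))  # [b'dog.jpg']
```

Choosing exponents coprime to P - 1 makes each exponentiation a one-to-one map, so two doubly blinded values can only collide when the underlying items hash to the same value.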

Next, a second technique, threshold secret sharing, will ensure that Apple can interpret the contents of the safety vouchers only if an iCloud Photos account exceeds a set threshold of known CSAM matches.
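Threshold secret sharing splits a secret into shares so that any t of them reconstruct it, while fewer than t reveal nothing about it. The Python sketch below implements Shamir's scheme, the classic construction, as an illustration; the article does not say which construction Apple uses. One way to picture the connection: if each matching image's safety voucher carried one share of a per-account decryption key, that key could only be recovered once the number of matches reached the threshold. The field size and the function names `make_shares` and `recover_secret` are assumptions.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # field modulus (a Mersenne prime); the secret must be smaller than this


def make_shares(secret: int, threshold: int, count: int) -> List[Tuple[int, int]]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, evaluated mod PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, f(x)) for x in range(1, count + 1)]


def recover_secret(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = 123456789  # hypothetical per-account secret
shares = make_shares(key, threshold=3, count=10)
print(recover_secret(shares[:3]) == key)   # True: three shares reach the threshold
print(recover_secret(shares[4:7]) == key)  # True: any three shares work
print(recover_secret(shares[:2]) == key)   # almost certainly False: two shares reveal nothing
```

The design choice is that no single share, and no set of shares below the threshold, carries usable information about the key.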

According to Apple, the threshold is set to provide an extremely high level of accuracy, ensuring less than a one in one trillion chance per year of incorrectly flagging a given account.
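Why a match threshold lowers the chance of flagging an account by mistake can be seen with a simple model. If each innocent photo falsely matches independently with probability p, the number of false matches among n photos is binomially distributed, and an account is flagged only when that count reaches the threshold t: the probability is the sum over k from t to n of C(n, k) p^k (1 - p)^(n - k). The Python sketch below evaluates this tail. The independence assumption, the parameter values, and the function name `false_flag_probability` are illustrative assumptions; this does not reproduce Apple's own calculation behind the one-in-a-trillion figure.

```python
from math import exp, lgamma, log, log1p


def false_flag_probability(n_images: int, p_false_match: float, threshold: int) -> float:
    """P(at least `threshold` of `n_images` innocent photos falsely match).

    Assumes each photo falsely matches independently with probability
    p_false_match (0 < p < 1), so the match count is Binomial(n_images, p).
    """
    total = 0.0
    for k in range(threshold, n_images + 1):
        # log of C(n, k) * p^k * (1 - p)^(n - k), computed in log space to avoid overflow
        log_pmf = (lgamma(n_images + 1) - lgamma(k + 1) - lgamma(n_images - k + 1)
                   + k * log(p_false_match) + (n_images - k) * log1p(-p_false_match))
        total += exp(log_pmf)
    return total


# Hypothetical numbers, not Apple's: 10,000 photos and a one-in-a-million false-match rate.
for t in (1, 2, 5, 10):
    print(t, false_flag_probability(10_000, 1e-6, t))
```

When n·p is small, the probability falls off steeply as t grows, which is why requiring several matches can make accidental flagging very unlikely even if individual false matches are not rare.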

Only when the threshold is exceeded does the cryptographic technology allow Apple to interpret the contents of the safety vouchers associated with the matching CSAM images.

Apple then manually reviews each report to confirm the match, disables the user’s account, and sends a report to NCMEC. Users who believe their account has been mistakenly flagged can file an appeal to have it reinstated.

Updated Siri and Search to provide guidance in unsafe online experiences

Siri and Search will be updated to help parents and children report CSAM or any other form of child exploitation they encounter online.

For example, users who ask Siri how to report CSAM or child exploitation will be pointed to resources explaining where and how to file a report.

Siri and Search will also “intervene” when users perform searches related to CSAM, explaining that “interest in this topic is harmful and problematic” and providing “resources from partners to get help with this issue.”

For the younger generation, a smart device is not just a luxury but a necessity for schoolwork. Unfortunately, being online also gives predators easy access to children, whom they can approach and exploit. Protective barriers like the ones Apple has announced should make it harder for predators to engage with children.

However, a cryptography expert has raised concerns that Apple’s new hashing system could compromise users’ privacy. He believes it could be exploited by bad actors and government agencies to spy on users.
