After delaying the launch of CSAM Detection for iCloud Photos, Apple has finally pulled the plug on the controversial safety feature. Wired reports that the tech company has now decided to focus on Communication Safety for Messages instead to prevent the generation of new CSAM (Child Sexual Abuse Material).

In 2021, Apple announced three new child safety features for all devices to protect children online and stop the spread of CSAM: Communication Safety in Messages, CSAM Detection for iCloud Photos, and updates to Siri and Search that offer guidance in unsafe online interactions.

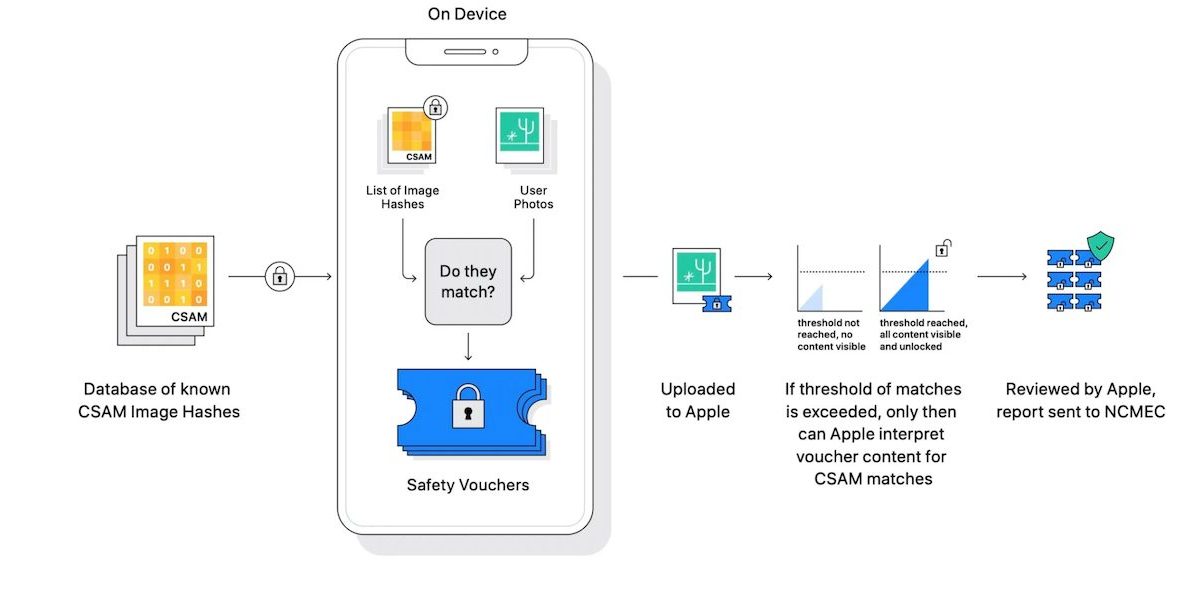

However, security and privacy experts immediately raised concerns about the potential misuse of CSAM Detection for iCloud Photos, which would have used a hashing system to match users’ photos against known CSAM hashes provided by NCMEC as the photos were uploaded to iCloud. Now, it is confirmed that CSAM Detection for iCloud Photos will never launch. The company said:

“After extensive consultation with experts to gather feedback on child protection initiatives we proposed last year, we are deepening our investment in the Communication Safety feature that we first made available in December 2021.

We have further decided to not move forward with our previously proposed CSAM detection tool for iCloud Photos. Children can be protected without companies combing through personal data, and we will continue working with governments, child advocates, and other companies to help protect young people, preserve their right to privacy, and make the internet a safer place for children and for us all.”

Apple to update Communication Safety for Messages with the ability to detect nudity in videos

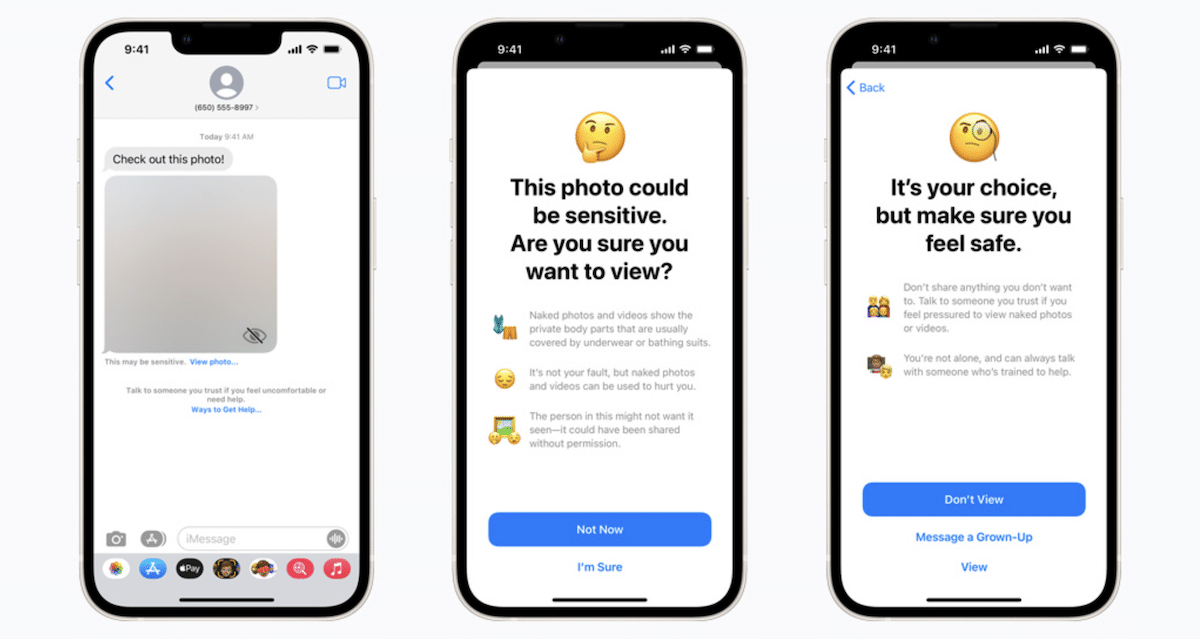

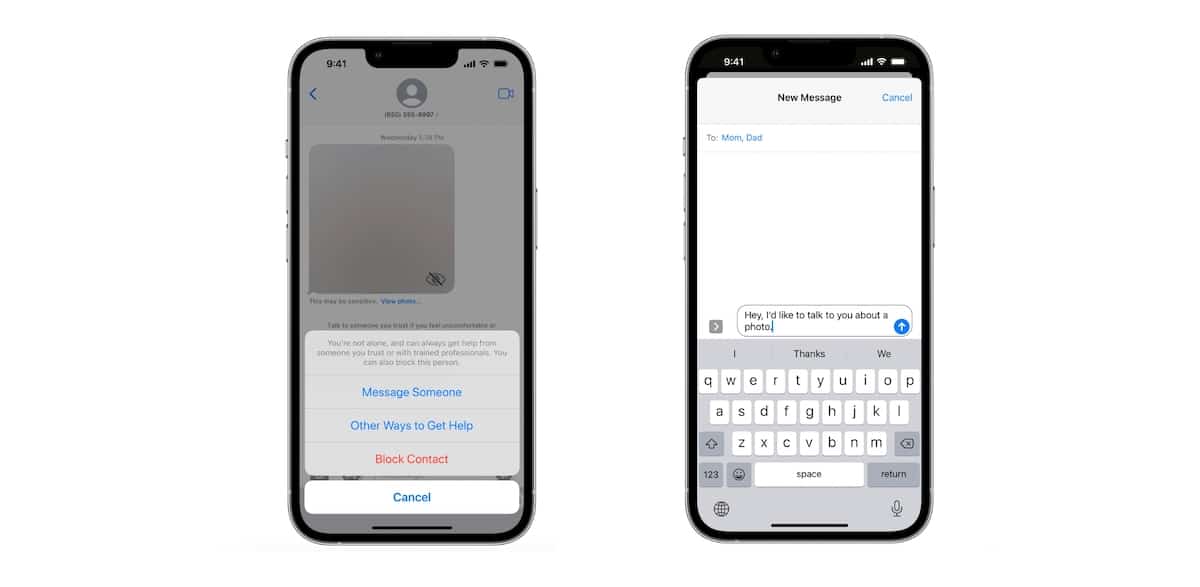

In iOS 15.2, Apple released Communication Safety in Messages, an opt-in safety feature that lets parents or caregivers on a Family Sharing plan protect young iPhone users from explicit content. When enabled, the feature analyzes images sent and received in the Messages app for nudity or CSAM.

If such content is detected, the feature blurs the image and displays a warning that the image could be sensitive, for example because it shows private parts, that viewing it can hurt, and that it could have been shared without the permission of the person in it. The young recipient is also told that it’s not their fault and that they can talk to a trusted person about the experience by sharing the message with a “grown-up”.

With more investment, the tech company is working on adding the detection of nudity or CSAM in videos shared via Messages or third-party messaging apps, when Communication Safety is enabled.

“Potential child exploitation can be interrupted before it happens by providing opt-in tools for parents to help protect their children from unsafe communications,” the company said in its statement. “Apple is dedicated to developing innovative privacy-preserving solutions to combat Child Sexual Abuse Material and protect children, while addressing the unique privacy needs of personal communications and data storage.”

Furthermore, the tech giant said that it will also continue to work with child safety experts to make it easier to report exploitative content and stop the spread of CSAM. Erin Earp, interim vice president of public policy at the anti-sexual violence organization RAINN, said:

“Scanning for CSAM before the material is sent by a child’s device is one of these such tools and can help limit the scope of the problem.”

Currently, Communication Safety for Messages is available in the U.S., Canada, the UK, Australia, and New Zealand.

Read More:

- How to easily turn on Communication Safety in Messages on iOS 15.2

- The child safety “Messages analysis system” will not break end-to-end encryption in Messages, says Apple

- A CSAM hashing error by Google lands a parent in trouble, raises more doubts about Apple’s CSAM Detection

- Digital rights group EFF urges Apple to abandon its CSAM plan entirely

- Apple confirms it scans iCloud Mail for CSAM but not iCloud Photos, yet