Apple has published an additional document that details the security measures it has implemented to prevent the misuse of the new ‘Expanded Protections for Children’.

The Cupertino tech giant introduced three new child safety features to protect young users from pedophiles and prevent the spread of child pornography. However, two of those features have caused an uproar: CSAM detection, the iCloud Photos scanning system, and Communication Safety in Messages, the system that analyzes images sent and received in Messages. Privacy and cryptography experts criticizing those systems argue that they have the potential to become tools that governments exploit to target individuals.

In a recent interview, Apple’s head of software, Craig Federighi, said that the new systems are auditable and verifiable, and the new document explains how, especially for the CSAM scanning process.

Apple elaborates on the threat model considerations of the Communication Safety in Messages and CSAM detection features

The document mainly distinguishes between the two child safety features, spelling out how they differ in functionality, scope, and impact. Here is how it addresses the privacy and security concerns raised about both the scanning and the analysis systems.

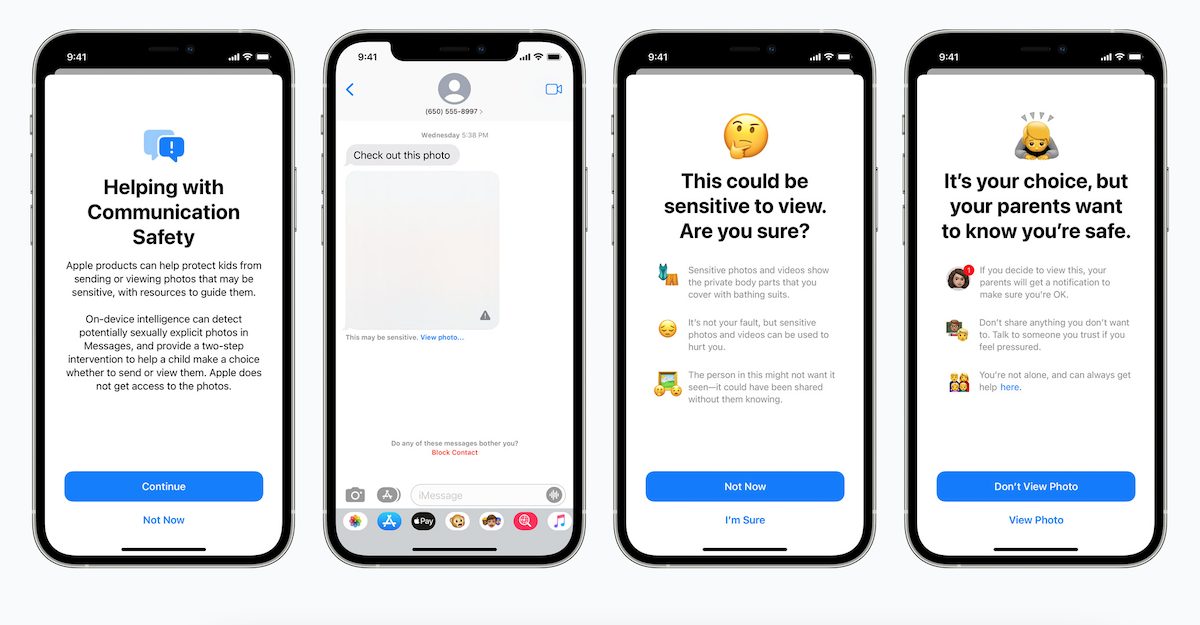

Communication Safety in Messages is an opt-in feature. All analysis and warnings are processed on the device, and none of the information is sent to Apple or law enforcement.

Specifically, it does not disclose the communications of the users, the actions of the child, or the notifications to the parents. It does not compare images to any database, such as a database of CSAM material. It never generates any reports for Apple or law enforcement.

The feature can only be activated for children’s accounts by parents or guardians via Family Sharing; “it is not enabled for such child accounts by default.” Even if the feature is maliciously activated for an adult’s account, the information will not leave the device.

If the feature were enabled surreptitiously or maliciously – for example, in the Intimate Partner Surveillance threat model, by coercing a user to join Family Sharing with an account that is configured as belonging to a child under the age of 13 – the user would receive a warning when trying to view or send a sexually explicit image

[…]If they declined to proceed, neither the fact that the warnings were presented nor the user’s decision to cancel, are sent to anyone.

In the case of a false positive on a child account with the notifications feature enabled, the flagged image will turn out to be non-sexual content, and since “the photo that triggered the notification is preserved on the child’s device, their parents can confirm that the image was not sexually explicit.”

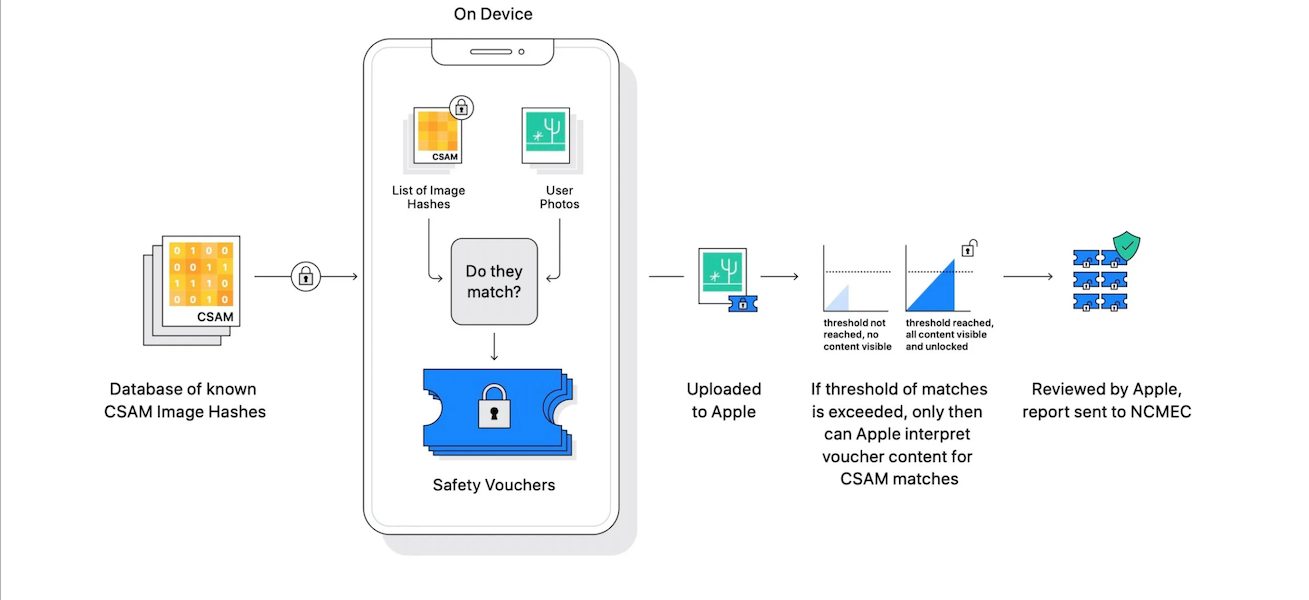

CSAM detection is exclusively part of the “cloud storage pipeline for images being uploaded to iCloud Photos and cannot act on any other image content on the device.”

- Generation of the perceptual CSAM hash database is protected against tampering because Apple does not create the CSAM database; it uses the one provided by NCMEC. The two strong protections against the risk of including non-CSAM images are the company’s use of an intersection of hashes provided by two child safety organizations and human review.

- Distribution of the perceptual CSAM hash database cannot be targeted at individuals because the company pushes the same database updates to all users globally. Therefore, “it is not possible – inadvertently or through coercion – for Apple to provide targeted users with a different CSAM database. This meets our database update transparency and database universality requirements.” Furthermore, the company will publish a Knowledge Base article containing a root hash of the encrypted CSAM hash database, which will be open to third-party technical audits.

- Matching against the perceptual CSAM hash database is handled by the NeuralHash algorithm, which is designed to “answer whether one image is really the same image as another, even if some image-altering transformations have been applied (like transcoding, resizing, and cropping).” The match threshold and the cloud storage code that invokes “NeuralHash to perform blinded matching on images being uploaded to iCloud Photos are all included as part of the code of the signed operating system.”

- Match voucher decryption by iCloud Photos servers is only activated when the match threshold is exceeded.

To make sure Apple’s servers do not have a count of matching images for users below the match threshold, the on-device matching process will, with a certain probability, replace a real safety voucher that’s being generated with a synthetic voucher that only contains noise. This probability is calibrated to ensure the total number of synthetic vouchers is proportional to the match threshold. Crucially, these synthetic vouchers are a property of each account, not of the system as a whole. For accounts below the match threshold, only the user’s device knows which vouchers are synthetic; Apple’s servers do not and cannot determine this number, and therefore cannot count the number of true positive matches.

- Human review and reporting will only be initiated when the match threshold is exceeded. First, the positive matches are checked with an independent hash, and once confirmed, they are sent for human review. Once the human reviewers confirm that the visual derivatives are CSAM, the account will be disabled and the user will be reported to “the child safety organization that works with law enforcement to handle the case further.”
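To make the database safeguards above a little more concrete, here is a minimal Python sketch of the two ideas: keeping only the hashes that two separate organizations both submitted, and comparing the database on a device against a published root hash. It is an illustration under stated assumptions, not Apple's implementation. The function names, the sample hash values, and the use of SHA-256 over an ordered list as a stand-in "root hash" are all hypothetical; the document does not describe the real construction at this level of detail.

```python
import hashlib


def intersect_hashes(org_a: set, org_b: set) -> list:
    """Keep only the hashes both organizations submitted, in a fixed order."""
    return sorted(org_a & org_b)


def root_hash(entries: list) -> str:
    """Stand-in 'root hash' (an assumption): SHA-256 over length-prefixed entries."""
    digest = hashlib.sha256()
    for entry in entries:
        digest.update(len(entry).to_bytes(4, "big"))  # length prefix avoids ambiguity
        digest.update(entry)
    return digest.hexdigest()


def database_matches_published(entries: list, published: str) -> bool:
    """An auditor or device recomputes the root hash and compares it."""
    return root_hash(entries) == published


# Made-up sample data: one entry was submitted by only one organization.
org_a = {b"hash-1", b"hash-2", b"hash-3"}
org_b = {b"hash-2", b"hash-3", b"hash-injected"}

shipped = intersect_hashes(org_a, org_b)   # the single-source entry is dropped
published = root_hash(shipped)             # the value that would be published

# A database with an extra entry no longer matches the published value.
tampered = shipped + [b"hash-injected"]
print(database_matches_published(shipped, published))   # True
print(database_matches_published(tampered, published))  # False
```

In this toy version, an entry that only one organization submits never reaches the shipped list, and any device holding a different list would compute a root hash that differs from the published one.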

Although the new document reiterates the explanations given by the company’s privacy and software heads, it directly addresses the critics’ fear that governments could spy on targeted individuals by injecting non-CSAM hashes: according to Apple, that would not be possible because the hash database will be the same for all users. You can read the complete document here, and we will let you know if critics are convinced by Apple’s security measures.
