A report by Ben Lovejoy of 9to5Mac claims that Apple has confirmed it scans users’ iCloud Mail for child sexual abuse material (CSAM), but not iCloud Photos or iCloud backups. After Eric Friedman, the company’s anti-fraud chief, said that Apple was “the greatest platform for distributing child porn,” Lovejoy asked Apple what data the claim was based on.

As emails are not encrypted, Apple scans them for CSAM

Since 2019, the Cupertino tech giant has been scanning iCloud Mail for CSAM because it is not encrypted. The company also confirmed that it scans some other, undisclosed data for child pornography, though not iCloud backups. Without commenting on Friedman’s statement, Apple assured Lovejoy that it has never scanned iCloud Photos. This led the writer to conclude that Friedman’s statement may not be backed by data.

> Although Friedman’s statement sounds definitive – like it’s based on hard data – it’s now looking likely that it wasn’t. It’s our understanding that the total number of CSAM reports Apple makes each year is measured in the hundreds, meaning that email scanning would not provide any kind of evidence of a large-scale problem on Apple servers.

Pointing to Apple software chief Craig Federighi’s remark that other cloud services already scan users’ photos while Apple does not, Lovejoy offered a possible explanation for Friedman’s statement.

> The explanation probably lies in the fact that other cloud services were scanning photos for CSAM, and Apple wasn’t. If other services were disabling accounts for uploading CSAM, and iCloud Photos wasn’t (because the company wasn’t scanning there), then the logical inference would be that more CSAM exists on Apple’s platform than anywhere else. Friedman was probably doing nothing more than reaching that conclusion.

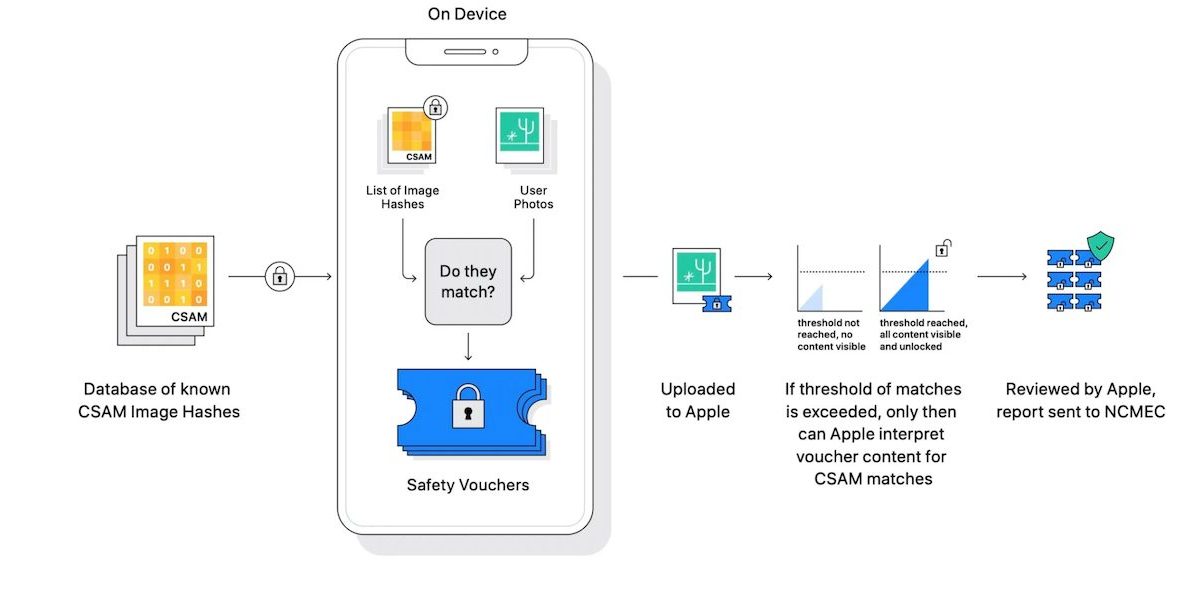

Scanning for Child Sexual Abuse Material (CSAM) is one of Apple’s upcoming Child Safety features. Called CSAM detection, the feature uses a hashing system called NeuralHash to check users’ photos against known CSAM as they are uploaded to iCloud Photos. When an account reaches a threshold of 30 matched images, it will be flagged for human review, in which a reviewer checks whether the matched images are actually CSAM. If the threshold was triggered by false positives, the reviewer would take no action. If the flagged material is child pornography, however, the account would be suspended and the user reported to the relevant authorities.
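To make the threshold logic concrete, here is a minimal Python sketch of a per-account match counter. It is a simplification under stated assumptions, not Apple’s implementation: Apple’s published design combines NeuralHash with cryptographic techniques (private set intersection and threshold secret sharing) so that individual matches are not revealed below the threshold, whereas this sketch replaces all of that with a plain set lookup. The names `Account`, `record_upload`, and `MATCH_THRESHOLD` are hypothetical, and the hash strings are placeholders standing in for NeuralHash outputs.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical constant: the article describes a threshold of 30 matched images.
MATCH_THRESHOLD = 30


@dataclass
class Account:
    """Hypothetical per-account state; not Apple's data model."""
    account_id: str
    match_count: int = 0
    flagged_for_review: bool = False


def record_upload(account: Account, image_hash: str, known_hashes: set[str]) -> None:
    """Count an upload whose hash appears in the set of known-CSAM hashes.

    Simplification: a plain set lookup stands in for NeuralHash matching and
    for the private set intersection / threshold secret sharing in Apple's design.
    """
    if image_hash in known_hashes:
        account.match_count += 1
    # Flag for human review once the number of matches reaches the threshold.
    if account.match_count >= MATCH_THRESHOLD:
        account.flagged_for_review = True


if __name__ == "__main__":
    known = {"hash-a", "hash-b"}  # placeholder hashes, not real data
    acct = Account("example-account")
    for _ in range(MATCH_THRESHOLD - 1):
        record_upload(acct, "hash-a", known)
    print(acct.match_count, acct.flagged_for_review)  # 29 False
    record_upload(acct, "unmatched", known)  # non-matching upload: count unchanged
    record_upload(acct, "hash-b", known)
    print(acct.match_count, acct.flagged_for_review)  # 30 True
```

In this sketch the flag only queues the account for human review; it does not suspend anything by itself, mirroring the review step in which false positives are dismissed.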

Although the feature is designed to prevent the spread of CSAM, the new hashing system has raised multiple concerns about possible exploitation. Critics claim that it could turn iPhones into surveillance devices for governments. Recently, two Princeton University researchers, drawing on their experience building a similar system, argued that the new CSAM detection system is flawed. For now, the new Expanded Protections for Children are scheduled for release later this year in iOS 15, iPadOS 15, and other updates across devices.
