Apple recently debuted iOS 17 and iPadOS 17 with a host of new privacy and security features for iPhone and iPad. In a new interview with Fast Company, Apple’s senior vice president of Software Engineering, Craig Federighi, discussed the new privacy features coming in iOS 17, as well as artificial intelligence, deepfakes, and more.

Craig Federighi on iOS 17 Check In, Lockdown Mode improvements, and more

Check In

During WWDC 2023, Apple introduced a new iOS 17 feature called Check In. It lets iPhone users choose specific contacts who are automatically notified when the user arrives home, offering a sense of reassurance.

But Check In goes further by actively monitoring the user’s progress. For instance, if the user said they would be home by midnight but it’s already 11:50 p.m. and they are still far away, Check In will reach out to the user to make sure they are OK.

Discussing Check In, Federighi emphasized its value for people who may feel unsafe walking home or traveling between locations.

“There are so many people who have said [they] feel a little insecure when they’re walking home from dinner, walking from the library to their dorm,” Federighi told Fast Company. Check In is a way “that we can provide some level of comfort and security for a large number of people.”

If the user fails to respond, Check In will send a message to the chosen contacts, sharing the user’s precise location, cell service status, iPhone battery level, and the last time the device was actively used.

Federighi also talked about Crash Detection, which was introduced with iOS 16. The feature’s impact became evident shortly after its release, when Apple received numerous letters from people who had been in car crashes. Federighi said he was surprised by the sheer number of incidents, which made clear how much the iPhone can help users get prompt assistance.

“When we shipped Crash Detection, I was amazed [by] how many letters we got, within days, from people who had been in car crashes. I was like, ‘Oh, my God, how many cars crash in a day?’ The answer turns out to be quite a few,” Federighi says. The people involved in the crashes “were confused and discombobulated. Maybe Crash Detection helped them get help a little earlier. In some cases, it saved their lives. It’s been a real eye-opener for us and helps us realize how much we can help.”

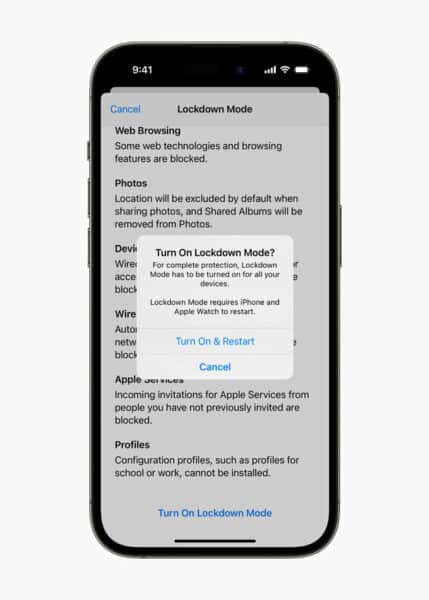

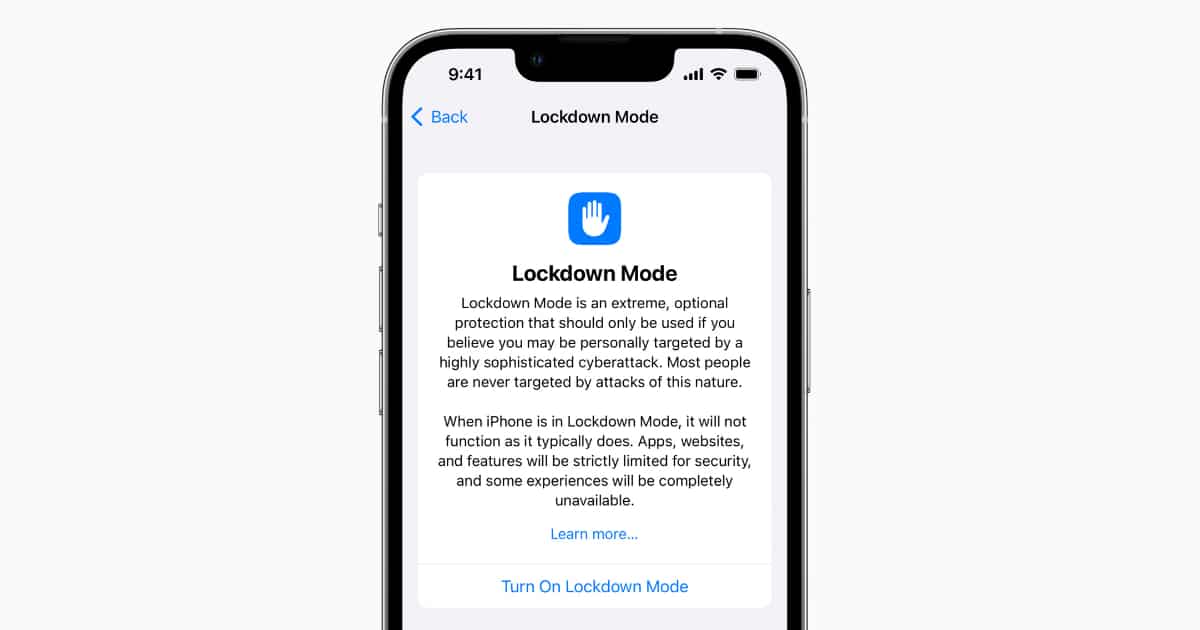

Lockdown Mode

Last year, Apple introduced Lockdown Mode for iPhone, iPad, and Mac, which lets users disable a range of device features and services to counter potential hacking attempts. Apple acknowledges that most users will likely never need to turn on Lockdown Mode. However, Federighi recognized that a specific group of iPhone users, including journalists, activists, and government officials, is already targeted by sophisticated attacks of this kind. Their vulnerability may increase if governments fail to impose legal restrictions on spyware tools like Pegasus.

“You have a class of users who may have real reason to believe that they could be targeted. For them, we can take advantage of a real asymmetry. Normally, attackers are looking across every surface area of code in the operating system looking for chinks in the armor, a narrow path through,” Federighi explains. “With Lockdown Mode, we can close the bulk of the access to those surfaces,” making attacks “much more expensive” to carry out “and much less likely to be successful.”

With iOS 17, Apple is bolstering Lockdown Mode with additional safeguards, such as blocking iPhone connections to 2G cellular networks and preventing it from automatically joining insecure Wi-Fi networks. Apple is also bringing Lockdown Mode to the Apple Watch for the first time with iOS 17 and watchOS 10, extending its protection to a broader range of devices.

AI and deepfakes

During the discussion on threats, Federighi was asked for his perspective on AI and its implications for privacy and security. He appeared to have given the matter considerable thought.

Federighi acknowledged that AI-based tools will undoubtedly get better at identifying potential security vulnerabilities and exploitable paths through code. He pointed out that these tools can be used not only by people trying to harden code and protect users but also by those trying to exploit vulnerabilities.

In other words, AI cuts both ways: it helps malicious actors, but it also helps those working to strengthen security. Federighi noted that Apple already uses a variety of static and dynamic analysis tools to detect code defects that humans may struggle to spot.

However, Federighi expressed concern about the human factor in privacy and security. Specifically, he is worried about the rise of deepfakes: AI-generated audio and video that can convincingly depict people saying or doing things they never did.

As AI tools become more accessible, deepfakes could increasingly be used in social engineering attacks, in which attackers trick victims into revealing valuable data by impersonating someone they know.

“When someone can imitate the voice of your loved one,” he says, spotting social engineering attacks will only become more difficult. If “someone asks you, ‘Oh, can you just give me the password to this and that? I got locked out,’ and it literally sounds like your spouse, that, I think, is going to be a real threat.” Apple is already thinking through how to defend users from such trickery. “We want to do everything we can to make sure that we’re flagging [deepfake threats] in the future: Do we think we have a connection to the device of the person you think you’re talking to? These kinds of things. But it is going to be an interesting time,” he says, and everyone will need to “keep their wits about them.”

In summary, while AI holds promise for strengthening security, Federighi highlighted the risks posed by the spread of deepfakes, which will make social engineering attacks harder to detect.

Read the full interview at Fast Company.