The New York Times reports that an incident of CSAM hashing by Google led to a police investigation of an innocent parent of a sick child in 2021, during the COVID-19 lockdown. The case has raised more questions about the reliability and accuracy of Apple’s CSAM detection feature and of the hashing system altogether.

Child Sexual Abuse Material (CSAM) is used by criminals to groom young children and is spread online for child pornography and sex trafficking purposes. To prevent the dissemination of CSAM, tech companies use a neural hashing system that scans users’ photos and compares them against hashes of known child sexual abuse material provided by the National Center for Missing and Exploited Children (NCMEC) in the U.S., and they take the necessary legal action when such material is detected.

Google launched the Content Safety API AI toolkit in 2018 on its services to “proactively identify never-before-seen CSAM imagery so it can be reviewed and, if confirmed as CSAM, removed and reported as quickly as possible.” As well-intentioned as the system is, it landed a concerned parent in hot water with law enforcement simply for getting a doctor’s consultation, a problem that privacy advocates have been warning about.

Google’s flawed CSAM hashing strengthens experts’ argument against Apple detection tech

According to the report, the accused parent, Mark, noticed swelling in his son’s genital region in February 2021 and, at a nurse’s request, took photos of his son for a video consultation with a doctor, because the offices were closed due to the COVID-19 pandemic.

Two days after taking the photos, Mark was notified by Google that his accounts had been locked for storing “harmful content” which violated the company’s policies and might be illegal. Mark’s phone number, contacts, photos, and emails were locked.

Although Mark tried to appeal the decision, he was reported to the authorities, and the San Francisco Police Department launched an investigation into the matter. As per The Verge, in 2021 “Google reported 621,583 cases of CSAM to the NCMEC’s CyberTipLine, while the NCMEC alerted the authorities of 4,260 potential victims, a list that the NYT says includes Mark’s son.”

The ordeal, which began with flawed hashing, ended when the investigator found that Mark was innocent and the incident “did not meet the elements of a crime and that no crime occurred.” This is exactly the nightmare for parents and other users that several privacy experts have been warning about. Innocent pictures of children playing in the bathtub or at the pool can also be flagged as CSAM.

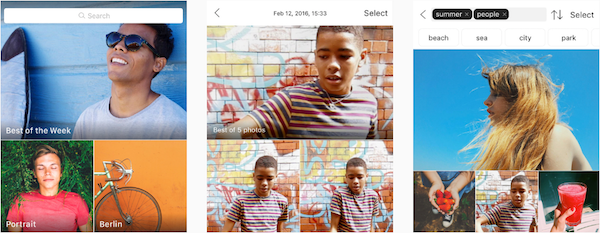

Apple announced a CSAM Detection feature that would scan users’ photos and compare them with child sexual abuse material hashes provided by NCMEC before uploading them to iCloud. When an account reached the threshold of 30 matching images, the company would refer it for human review and take punitive action if required.
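The threshold rule described above can be illustrated with a minimal sketch. It is only a plain counter written for illustration, not Apple’s implementation: the function name `accounts_for_review`, the `(account_id, image_id)` event format, and the account names are all hypothetical, and the only figure taken from the article is the threshold of 30.

```python
from collections import Counter

# Threshold figure quoted in the article; everything else here is illustrative.
REVIEW_THRESHOLD = 30


def accounts_for_review(match_events, threshold=REVIEW_THRESHOLD):
    """Return account IDs whose count of distinct matched images meets the threshold.

    match_events: iterable of (account_id, image_id) pairs, one per hash match.
    """
    # De-duplicate so the same image is counted only once per account.
    unique_matches = set(match_events)
    counts = Counter(account for account, _image in unique_matches)
    return sorted(account for account, n in counts.items() if n >= threshold)


# Hypothetical data: acct-a has 30 distinct matches, acct-b has 29 plus a repeat.
events = [("acct-a", f"img-{i}") for i in range(30)]
events += [("acct-b", f"img-{i}") for i in range(29)]
events += [("acct-b", "img-0")]  # duplicate of an earlier match

print(accounts_for_review(events))  # ['acct-a']
```

In this sketch a single match never triggers a referral; only an account that accumulates 30 distinct matches is passed on for human review.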

However, a developer reverse-engineered Apple’s iOS 14.3 update to extract the detection system and highlighted that the hashing system was flawed. After backlash from journalists, security experts, and others, Apple delayed the feature for the time being.

So, it wasn’t “hashing” that caught this, rather it was AI. Hashing involves applying an algorithm to data, resulting in a fixed value for a specific data set, much like a fingerprint. Known videos, photos, etc., of CSAM are hashed and the values stored in databases. YOUR photos are hashed and the resultant values compared to known hashes to determine if you are in possession of previously identified CSAM. This mechanism wouldn’t have identified these photos, as they were unique originals created by the unfortunate victim. However, I guarantee you the hashes of these videos, photos, etc., are now stored in a database for future identification.
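A minimal sketch of that matching step, under stated assumptions: SHA-256 from Python’s standard `hashlib` stands in for the hash function, and the byte strings are harmless placeholders. Deployed systems are generally described as using perceptual hashes that tolerate resizing and re-encoding, so this exact-match version only illustrates the point that a file never seen before has no entry in the database. The helper names are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return a fixed-length fingerprint (64 hex characters) for the given bytes."""
    return hashlib.sha256(data).hexdigest()


def build_known_hashes(known_files):
    """Hash every previously identified file and store the values in a set."""
    return {sha256_hex(data) for data in known_files}


def is_known(data: bytes, known_hashes) -> bool:
    """Check whether this file's hash appears in the database of known hashes."""
    return sha256_hex(data) in known_hashes


# Placeholder byte strings standing in for file contents.
known_hashes = build_known_hashes(
    [b"previously identified file A", b"previously identified file B"]
)

copy_of_known = b"previously identified file A"
new_original = b"a brand-new photo taken today"

print(is_known(copy_of_known, known_hashes))  # True
print(is_known(new_original, known_hashes))   # False
```

The second lookup returns False because the new file was never hashed into the database, which is why a pure hash comparison could not have flagged freshly taken photos.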

What identified these photos was AI software designed to analyze photos and trained by exposure to child porn to supposedly be able to identify it versus adult porn.

Welcome to guilty even when proven innocent.