The EU Commission has proposed new laws to combat and prevent the dissemination of child sexual abuse material (CSAM) online. The authority would force providers like Apple, Google, and others to detect, report, and remove CSAM from their platforms.

In August 2021, Apple announced “Expanded Protection for Children” which included three key features:

- Communication Safety in Messages – an opt-in feature for parents to enable on-device scanning of all images sent or received in the Messages app for CSAM or sexually explicit content. On detection of exploitive material, the feature warns children and suggests resources to seek help.

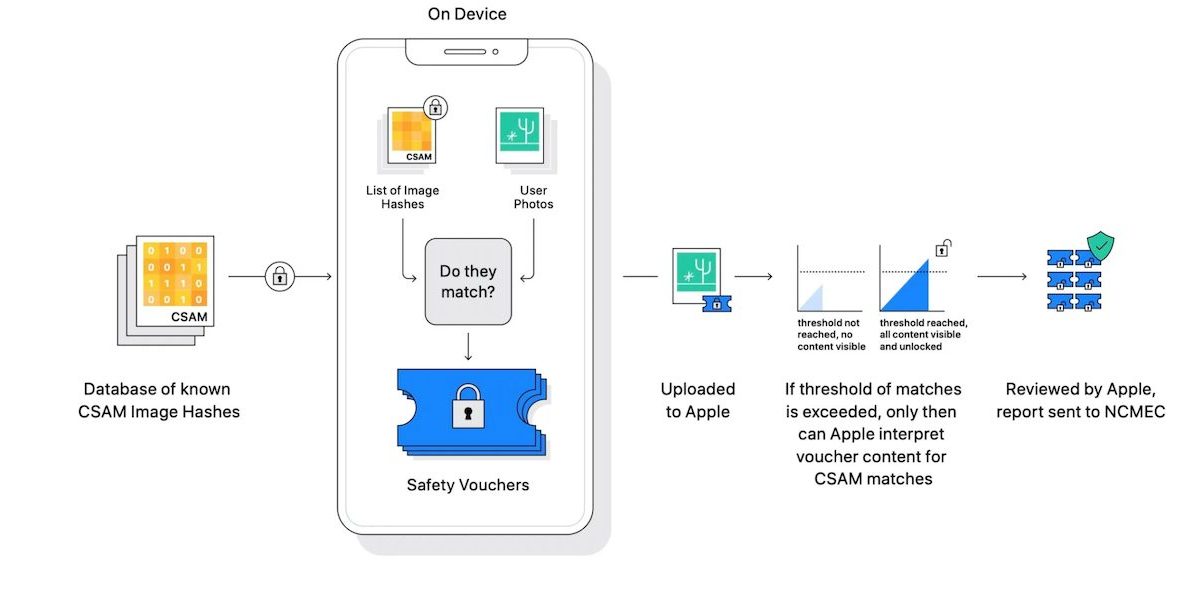

- CSAM Detection in iCloud Photos through on-device matching of images against a database of known CSAM image hashes provided by NCMEC.

- Intelligent Siri and Search to intervene in CSAM-related searches, and help users report CSAM and other types of child exploitation encountered online.

Of the three features, CSAM Detection was criticized the most by cybersecurity experts, researchers, and civil rights groups, who argued that it would create a backdoor for law enforcement agencies and authoritarian governments to spy on users and turn more than a billion iPhones into surveillance devices.

After widespread backlash, the tech giant decided to delay the CSAM Detection feature to address concerns that it could be repurposed for surveillance and censorship. However, the EU Commission could now force Apple to bring CSAM detection into effect.

Although Apple already scans iCloud Mail for CSAM, it does not scan iCloud Photos for child sexual abuse material. This means Apple will have to figure out how it can comply with the new EU rules and avoid controversy at the same time.

EU Commission accused of working to remove end-to-end encryption protection under the pretext of combating CSAM online

The new “Proposal for a Regulation laying down rules to prevent and combat child sexual abuse” has a three-point agenda:

- Effective detection, reporting, and removal of online child sexual abuse

- Improved legal certainty, ensuring the protection of fundamental rights, transparency and accountability

- Reduction in the proliferation and effects of child sexual abuse through increased coordination of efforts

However, cybersecurity experts are raising the same concerns as they did with Apple. Matthew Green, who accurately leaked and criticized Apple’s CSAM detection system, is calling out the EU’s ulterior motive to remove end-to-end encryption protection. He wrote:

Speaking of actual free speech issues, the EU is proposing a regulation that could mandate scanning of encrypted messages for CSAM material. This is Apple all over again.

This document is the most terrifying thing I’ve ever seen. It is proposing a new mass surveillance system that will read private text messages, not to detect CSAM, but to detect “grooming”.