The Chairman of the German Bundestag's Digital Agenda committee has written a letter to Apple CEO Tim Cook, asking him to “crush” the launch of the Child Sexual Abuse Material (CSAM) detection feature. The committee fears that the scanning capability could undermine “secure and confidential communication” and turn the internet into a surveillance tool.

Earlier, a union of journalists from Germany, Austria, and Switzerland urged the EU Commission and the Austrian and German federal interior ministers to take action against the release of Apple’s upcoming CSAM detection feature in Europe. The union raised similar concerns, warning that the new scanning system would threaten the freedom of the press.

German parliamentarians reject the CSAM detection system, calling it the biggest breach of users’ trust

Drawing on criticism from the EFF (Electronic Frontier Foundation), a digital civil rights organization, the German parliamentarians are wary of the CSAM detection system that Apple plans to ship as part of the new child safety features in the iOS 15 update. Although Apple will initially launch the features only in the United States, the company plans to roll them out globally on a country-by-country basis.

The German parliamentarians therefore wrote to Tim Cook to share their concerns and to warn of “foreseeable problems” for the company if the feature is rolled out in Europe. According to heise.de, the letter stated:

The planned CSAM scanning is the “biggest breach of the dam for the confidentiality of communication that we have seen since the invention of the Internet,” writes the chairman of the committee, Manuel Höferlin (FDP), in a letter sent to Apple boss Tim Cook on Tuesday. Every piece of scanned content ultimately destroys users’ trust that their communication is not being monitored. Without confidential communication, however, the Internet would become “the greatest surveillance instrument in history”.

Apple’s promise to narrowly limit the feature’s scope could not change that, Höferlin emphasized. Even a narrow back door ultimately remains a back door, writes the member of the Bundestag, referring to the civil rights organization EFF, which had already sharply criticized Apple’s planned features. Requests to expand the scanning function to other content are foreseeable, and Apple could lose access to large markets by rejecting such requests.

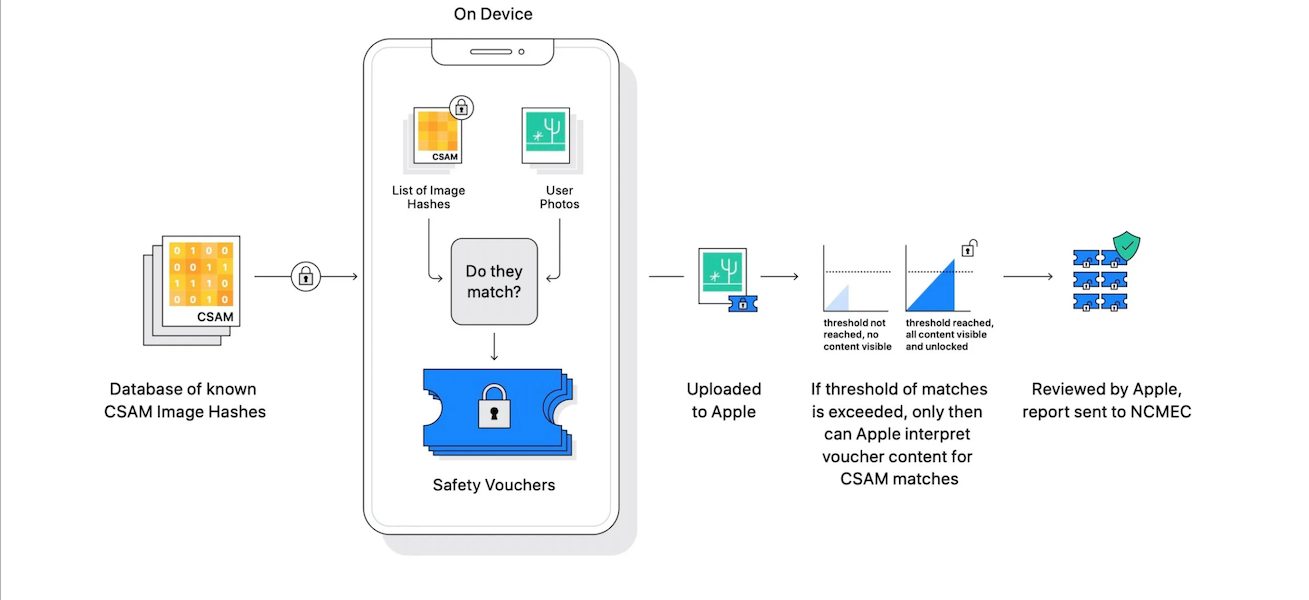

Since announcing the new Expanded Protections for Children, Apple’s heads of Software and Privacy have been working to correct the misconception that “Apple is scanning my phone for images”, and the company has released documents detailing how the new systems work and the protective layers built in to prevent exploitation. But as Craig Federighi pointed out, the early soundbite that got out created a lot of confusion.
