The Irish Data Protection Commission (DPC) has fined Instagram €405 million ($403 million at the current exchange rate) for violating children’s privacy. The penalty resolves a long-running complaint about the company’s handling of children’s data.

Politico reports that Instagram was found to have breached young users’ privacy by publishing their email addresses and phone numbers, in violation of the General Data Protection Regulation (GDPR).

This is the second-largest fine levied under the GDPR; in 2021, Amazon was fined €746 million. It is also the highest penalty so far for Instagram’s parent company Meta, following a €225 million fine for WhatsApp and a €17 million fine for Facebook.

Instagram’s data handling of children’s accounts violates the EU’s General Data Protection Regulation

According to TechCrunch, the complaint focused on two practices: Instagram’s processing of children’s data for business accounts, and a default that set children’s accounts to “public” until the user changed them to “private”. Both practices violate GDPR rules.

The GDPR contains strong measures requiring privacy by design and by default in general, as well as provisions aimed specifically at enhancing the protection of children’s information and ensuring that services targeting kids live up to transparency and accountability principles (such as by providing suitably clear communications that children can understand).

Ireland’s DPC confirmed the fine to TechCrunch and said that full details would be published in the coming week. The decision was reached in collaboration with the European Data Protection Board (EDPB), which is responsible for coordinating a decision-review process with other EU data protection authorities.

In a statement to Politico, a Meta spokesperson said that the company has implemented several changes for young children and teens on the platform since the investigation was launched, and that it is reviewing the final decision.

“This inquiry focused on old settings that we updated over a year ago, and we’ve since released many new features to help keep teens safe and their information private. Anyone under 18 automatically has their account set to private when they join Instagram, so only people they know can see what they post, and adults can’t message teens who don’t follow them. We engaged fully with the DPC throughout their inquiry, and we’re carefully reviewing their final decision.”

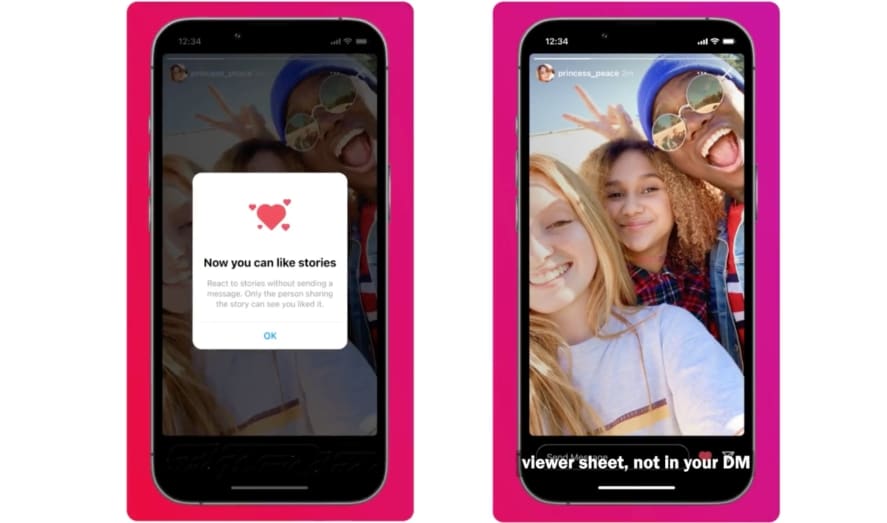

Recently, Instagram enabled ‘Sensitive Content Control’ by default for users under 16 to protect them from sensitive content. Its earlier youth safety measures include a new Parents Guide, AI and ML technologies for age verification, restrictions on Direct Messages between young users and adults, safety notices, and more.

The social media company is also facing an investigation by U.S. state attorneys general over the app’s impact on children’s mental and physical health and safety. The DPC is conducting six more investigations into Meta-owned companies, which means more fines might follow if the platforms are found guilty.

Earlier this year, Meta threatened to pull Facebook, Instagram, and its other services out of Europe if it were barred from transferring EU users’ data to its US servers. The threat did not garner a favorable response: two politicians said that life would be better without Facebook and that they would not be intimidated by big tech companies like Meta.