To our shock, Reddit user @ibreakphotos has tested and proved that Samsung Galaxy’s famous moon shots captured with its Space Zoom camera feature are fake.

Starting with the Galaxy S20 and S20 Ultra, Samsung introduced Space Zoom, which uses periscope zoom to capture high-resolution images of distant objects and people at up to 100x zoom. Its headline use case is taking super-detailed shots of the moon from Earth.

In the Samsung vs. Apple debate, Space Zoom is often cited as proof that the iPhone camera system lacks the innovation found in comparable Galaxy S-series models.

In his review of the Galaxy S23 Ultra, YouTuber MKBHD proclaimed that its folded periscope lens, which combines 10x optical zoom with digital zoom to reach 100x, captured “ridiculous,” detailed images of the moon. He stressed that the images were not an “overlay or fake thing,” as Huawei has been accused of producing, and added that Apple was years away from offering the tech in iPhones.

However, it appears the Space Zoom moon shots are a hoax.

Samsung’s AI, not its periscope zoom, delivers the super-detailed Space Zoom moon shots

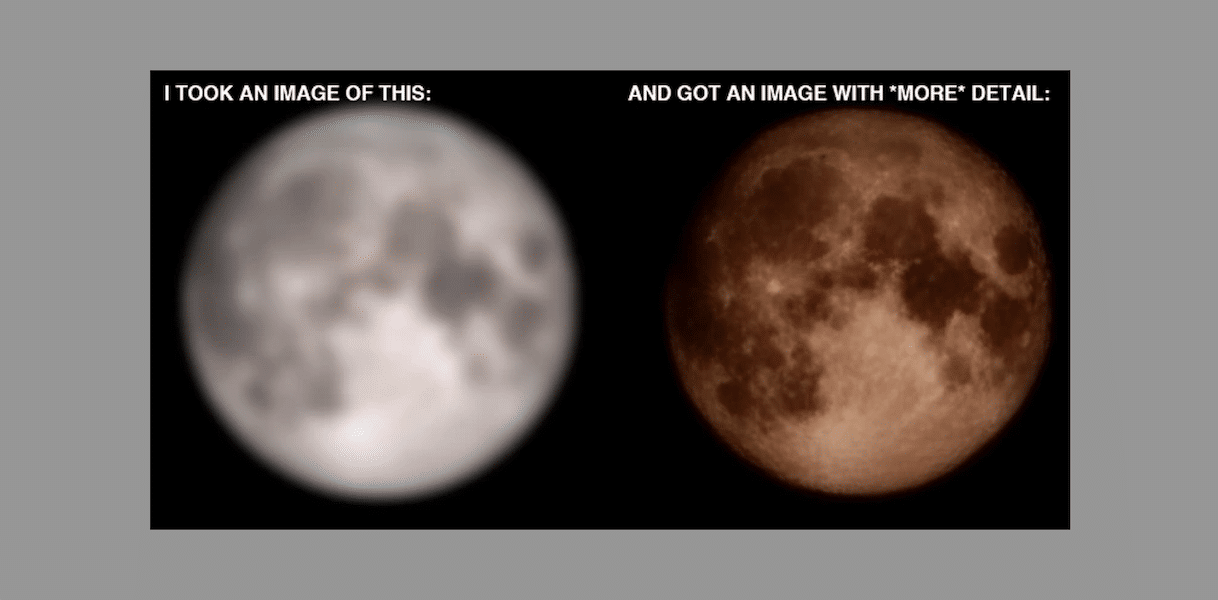

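For the test, the Redditor downloaded a high-resolution image of the moon, downsized it to 170×170 pixels, and applied a Gaussian blur, which wiped out all of its fine detail. He then displayed the blurred image on his monitor and photographed it with a Galaxy S23 Ultra. Because the detail had been removed before the shot was taken, any crater detail in the resulting photo could not have been recovered from the source.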
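The two edits described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Redditor's actual workflow: the synthetic 340×340 test pattern (standing in for a real moon photo), the box-average downsizing, the blur strength `sigma=3.0`, and all function names are hypothetical. The sketch shrinks the image to 170×170, applies a Gaussian blur, and measures how much fine detail survives.

```python
import math

# Toy sketch: names, sizes and sigma are illustrative assumptions.


def make_test_image(size=340, period=10, base=128.0, amplitude=40.0):
    """Hypothetical stand-in for a high-res moon photo: a flat gray field
    plus a fine periodic texture playing the role of crater detail."""
    s = [math.sin(2 * math.pi * i / period) for i in range(size)]
    return [[base + amplitude * s[x] * s[y] for x in range(size)] for y in range(size)]


def downsize(img, factor):
    """Shrink a square image by an integer factor, averaging each block."""
    n = len(img) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(n)
        ]
        for y in range(n)
    ]


def gaussian_kernel(sigma):
    """1-D Gaussian weights truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(img, kernel):
    """Convolve every row with the kernel, wrapping around at the edges."""
    r = len(kernel) // 2
    out = []
    for row in img:
        n = len(row)
        out.append([sum(w * row[(i + k - r) % n] for k, w in enumerate(kernel))
                    for i in range(n)])
    return out


def transpose(img):
    return [list(col) for col in zip(*img)]


def gaussian_blur(img, sigma):
    """Separable 2-D Gaussian blur: blur the rows, then the columns."""
    kernel = gaussian_kernel(sigma)
    return transpose(blur_rows(transpose(blur_rows(img, kernel)), kernel))


def detail(img):
    """Mean absolute difference between horizontally adjacent pixels,
    a crude measure of fine (high-frequency) detail."""
    diffs = [abs(row[i + 1] - row[i]) for row in img for i in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


small = downsize(make_test_image(), 2)  # 340x340 -> 170x170
blurred = gaussian_blur(small, sigma=3.0)
print(f"size after downsizing: {len(small)}x{len(small[0])}")
print(f"fine detail before blur: {detail(small):.3f}")
print(f"fine detail after blur:  {detail(blurred):.6f}")
```

Texture that repeats every few pixels, like the toy pattern here, is suppressed almost entirely by a blur of this width, while overall brightness is preserved, so essentially nothing of the fine detail is left in the file for any camera pipeline to recover.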

The first experiment revealed that Samsung uses a specially trained AI model, built on hundreds of moon images, whose sole job is to recognize the moon and “slap” details onto it.

> In the side-by-side above, I hope you can appreciate that Samsung is leveraging an AI model to put craters and other details on places which were just a blurry mess. And I have to stress this: there’s a difference between additional processing a la super-resolution, when multiple frames are combined to recover detail which would otherwise be lost, and this, where you have a specific AI model trained on a set of moon images, in order to recognize the moon and slap on the moon texture on it (when there is no detail to recover in the first place, as in this experiment). This is not the same kind of processing that is done when you’re zooming into something else, when those multiple exposures and different data from each frame account to something. This is specific to the moon.
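The distinction the Redditor draws above can be illustrated with a toy 1-D Python sketch. It assumes a striped “scene” whose detail was blurred away before the camera saw it, plus independent Gaussian noise on every exposure, and it uses plain frame averaging as a simplified stand-in for multi-frame processing (real super-resolution pipelines also align frames and exploit sub-pixel shifts, which this sketch omits). All names, sizes, and noise levels are hypothetical.

```python
import math
import random


def gaussian_kernel(sigma):
    """1-D Gaussian weights truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, kernel):
    """Convolve a 1-D signal with the kernel, wrapping around at the ends."""
    r = len(kernel) // 2
    n = len(signal)
    return [sum(w * signal[(i + k - r) % n] for k, w in enumerate(kernel)) for i in range(n)]


def rms(a, b):
    """Root-mean-square difference between two equal-length signals."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Hypothetical sharp scene: fine stripes, 5 px on / 5 px off.
sharp = [1.0 if (i // 5) % 2 == 0 else 0.0 for i in range(100)]

# What actually reaches the camera: the scene after its detail was blurred away.
scene = blur(sharp, gaussian_kernel(3.0))

# Simulate 64 exposures of that scene, each with independent sensor noise.
rng = random.Random(0)
frames = [[v + rng.gauss(0.0, 0.2) for v in scene] for _ in range(64)]

# Multi-frame stand-in: average the exposures pixel by pixel.
stacked = [sum(column) / len(frames) for column in zip(*frames)]

print(f"single frame vs. blurred scene: {rms(frames[0], scene):.3f}")
print(f"stacked vs. blurred scene:      {rms(stacked, scene):.3f}")
print(f"stacked vs. sharp scene:        {rms(stacked, sharp):.3f}")
```

Stacking cuts the noise, so the averaged signal closely matches what the camera actually saw, but it converges on the blurred stripes rather than the sharp ones. Getting the sharp stripes back would require adding information that none of the frames contained.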

His first verdict: the moon pictures from “Samsung are fake and its marketing is deceptive. It is adding detail where there is none (in this experiment, it was intentionally removed).” The Redditor then ran a second test suggested by a commenter.

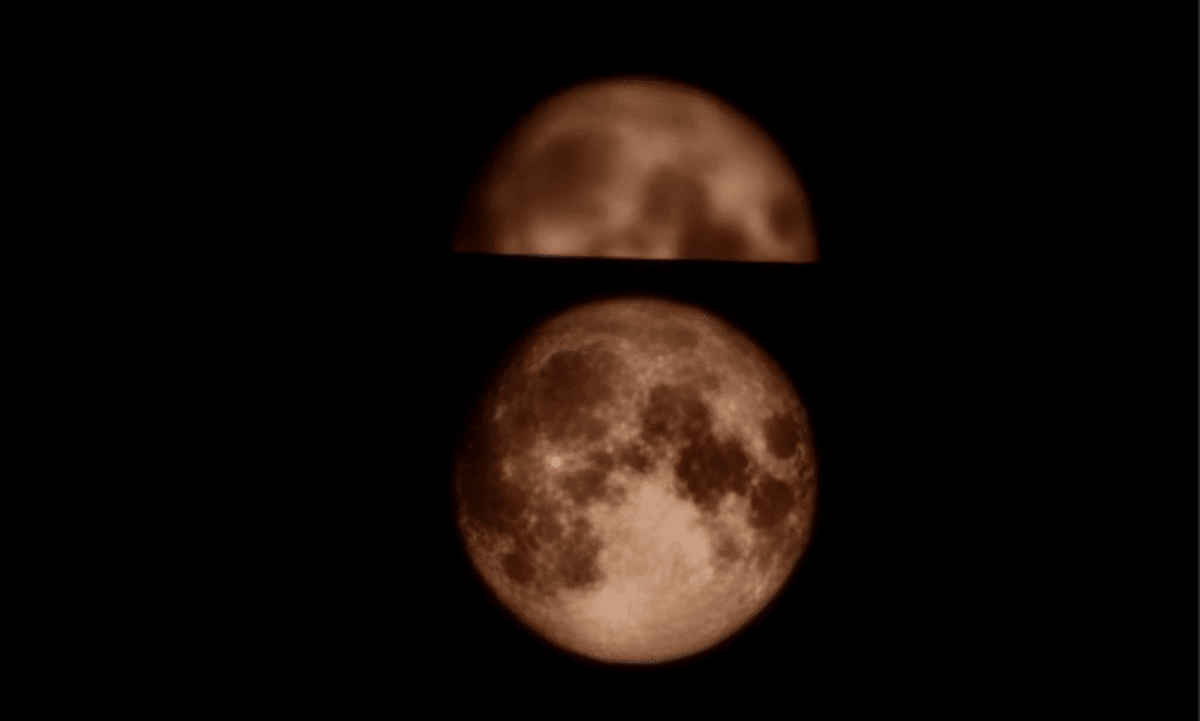

In the second experiment, two images of a blurred moon were placed side by side to trick Samsung’s AI into adding details to one and leaving the other as it was. Sure enough, the Galaxy S23 Ultra’s AI did just that, further solidifying the first conclusion.

> It’s literally adding in detail that weren’t there. It’s not deconvolution, it’s not sharpening, it’s not super resolution, it’s not “multiple frames or exposures”. It’s generating data.