Today, Snapchat confirmed that it will be one of the first services to put the iPhone 12 Pro’s LiDAR Scanner to use in its iOS app, for a new augmented reality Lens. The social media app gained popularity for its variety of stickers, filters, and AR effects that transform users’ photos and videos into creative and expressive projects. Now Snapchat is taking that experience to the next level with the LiDAR Scanner on the new iPhone 12 Pro and Pro Max.

Announced at the October 13 event, the high-end models of the new iPhone 12 lineup are equipped with a new LiDAR Scanner, which is designed to deliver an immersive augmented reality (AR) experience like the one on the current 2020 iPad Pro.

Snapchat Embraces iPhone 12 Pro’s LiDAR Scanner

Snapchat is already known for some best-in-class AR photo filters, so LiDAR support could lead to a whole new AR experience. The company says it will soon launch a LiDAR-powered Lens specifically for the iPhone 12 Pro models.

As Apple explained during its virtual event, the LiDAR (Light Detection and Ranging) Scanner measures how long it takes for light to reach an object and reflect back. The scanner helps the iPhone understand the user’s surroundings and supports new learning capabilities and development frameworks. Apple is also using the LiDAR Scanner in the camera system of the iPhone 12 Pro and Pro Max, where it improves low-light photography thanks to its ability to “see in the dark”.
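The timing principle described above can be illustrated with a minimal sketch. It is not Apple's implementation, only the basic time-of-flight relation: the light travels to the object and back, so the distance is half the round-trip path. The function names here are hypothetical and chosen for illustration.

```python
# Illustrative time-of-flight calculation (not Apple's implementation).
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact value by SI definition


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance in meters to an object, given the round-trip time of light."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at the given distance."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A pulse that returns after 20 nanoseconds hit something about 3 m away.
    print(f"{distance_from_round_trip(20e-9):.3f} m")
```

For room-scale distances the round trip takes only a few nanoseconds, which is why this kind of measurement needs dedicated sensor hardware.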

App developers can use LiDAR technology to perform tasks like scanning rooms, measuring spaces, and placing objects. This technology unlocks new opportunities such as better AR shopping apps, home design tools, and AR games.

It can also enable more expressive photo and video effects and a more accurate placement of AR objects, as the iPhone is actually able to ‘see’ a depth map of the room.

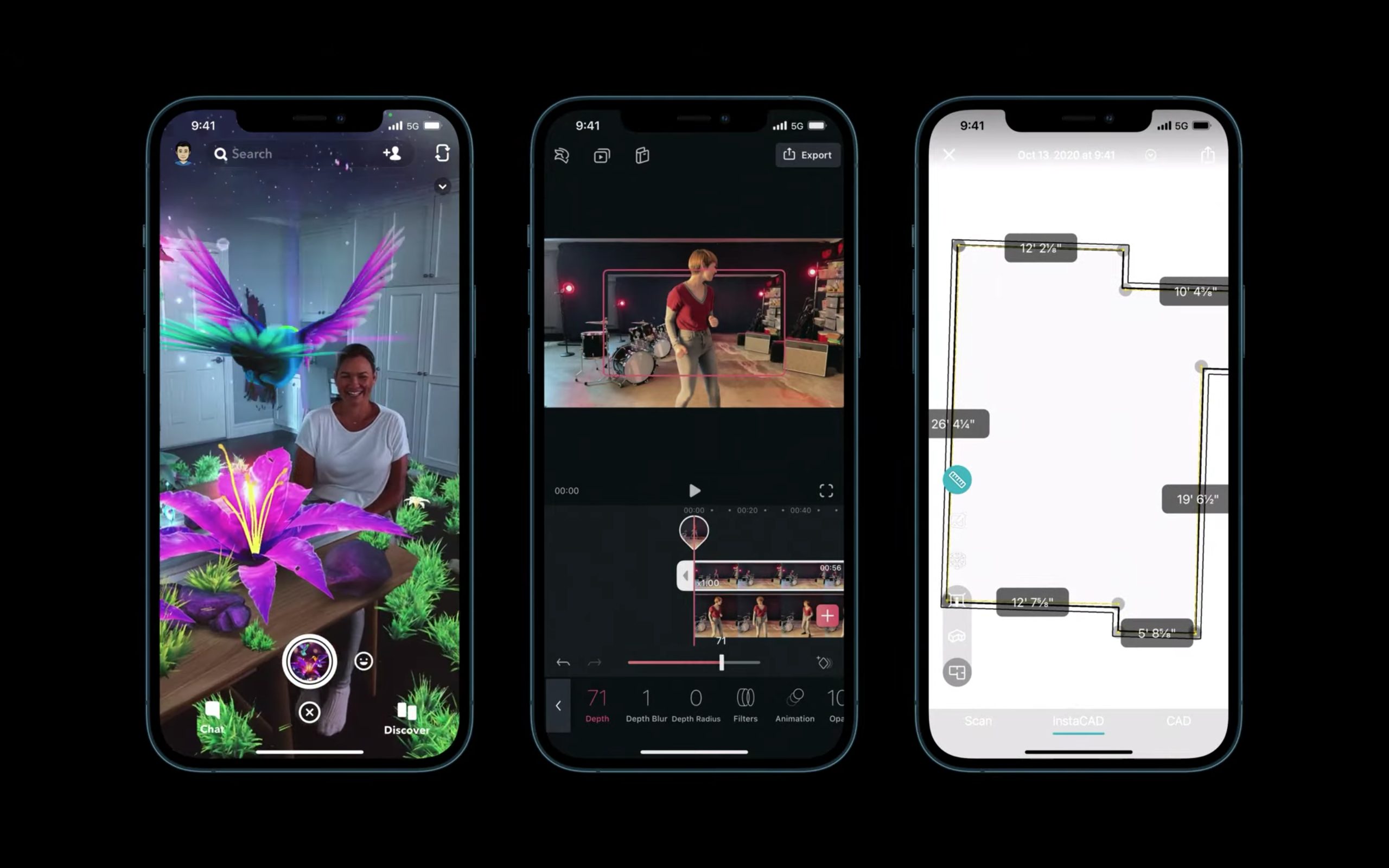

During the event, Apple gave a brief peek at Snapchat’s LiDAR-powered features. It showed an AR Lens in the Snapchat app in which flowers and grasses cover the table and floor, and birds fly toward the user’s face. The grasses toward the back of the room looked as if they were farther away than those closer to the user, and vegetation was even climbing up and around the kitchen cabinets, a hint that the Lens saw where those objects were in the physical space.

It appears likely that this is the Lens Snapchat has in the works, but the company is holding back further details for the time being. Either way, the demo shows what a LiDAR-enabled Snapchat experience would feel like.

The Snapchat filter can be seen in action at 59:41 in the Cupertino tech giant’s event, ‘Hi, Speed’.

via TechCrunch
