Apple has updated its Vision framework to detect hand and body poses in apps on iOS 14 and macOS Big Sur. At WWDC20 in June, engineer Brett Keating explained to developers that the Vision framework can help their apps detect body movements and hand gestures in videos and photos. He emphasized that the detection technology will help developers create more interactive apps and editing tools that analyze users’ body language and actions through the updated Vision framework APIs.

The description of the updated technology reads:

“Explore how the Vision framework can help your app detect body and hand poses in photos and video. With pose detection, your app can analyze the poses, movements, and gestures of people to offer new video editing possibilities, or to perform action classification when paired with an action classifier built in Create ML”.

New APIs in Vision Framework

Keating said that the new detection technology is designed to help developers better understand people in visual data and improve the user experience by delivering more interactive and versatile apps. The new Vision framework APIs detect human hand poses and human body poses.

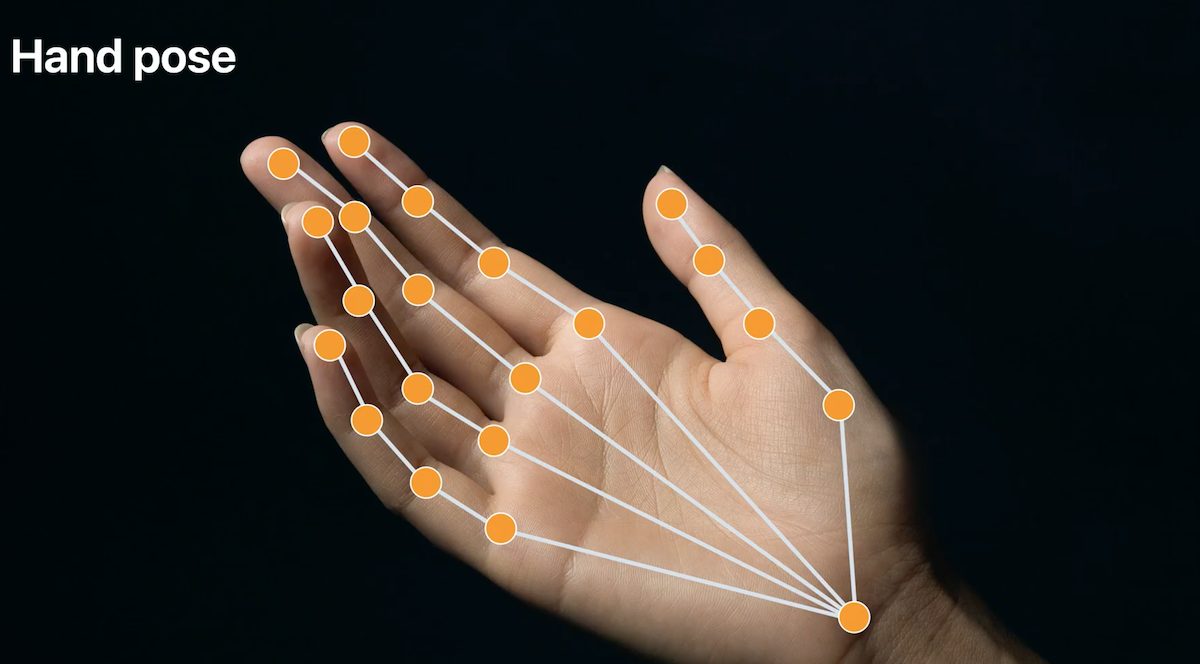

Hand Pose

The hand pose detection technology will enable developers to design apps that let users interact with the screen without touching it or using a stylus. The following are some applications of the feature:

- Hand gestures can trigger actions. In camera mode, for example, an app can detect a specific gesture and capture a photo.

- In video mode, developers can overlay fun graphics, such as hand emojis, on users’ hands to make video calls or recordings more expressive.

- Users can draw on the screen from a distance, without touching it, by pinching their fingertips together.

However, Keating also listed accuracy considerations that developers need to keep in mind. The APIs may not reliably detect hands that are near the edges of the screen, parallel to the viewing direction, or covered by gloves, and they may sometimes detect feet as hands.
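The pinch-to-draw idea above can be sketched in Swift roughly as follows. This is a minimal sketch, not code from the session: the `Fingertip` type, the `isPinching` and `detectPinch` helpers, and the 0.05 distance and 0.3 confidence thresholds are assumptions chosen for illustration. The Vision calls (`VNDetectHumanHandPoseRequest`, `recognizedPoint(_:)`) are the framework's own, and the sketch assumes a recent SDK in which the request's `results` are typed as hand pose observations.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// A fingertip in normalized image coordinates (0...1), with a confidence score.
/// Hypothetical helper type, not part of Vision.
struct Fingertip {
    var x: Double
    var y: Double
    var confidence: Double
}

/// Returns true when both tips are detected confidently and lie close together.
/// Both thresholds are illustrative assumptions to tune per app.
func isPinching(thumb: Fingertip, index: Fingertip,
                maxDistance: Double = 0.05,
                minConfidence: Double = 0.3) -> Bool {
    guard thumb.confidence >= minConfidence,
          index.confidence >= minConfidence else { return false }
    let dx = thumb.x - index.x
    let dy = thumb.y - index.y
    return (dx * dx + dy * dy).squareRoot() <= maxDistance
}

#if canImport(Vision)
/// Runs hand pose detection on one image and checks the first hand for a pinch.
@available(iOS 14.0, macOS 11.0, *)
func detectPinch(in image: CGImage) throws -> Bool {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1  // one hand is enough for drawing
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])
    guard let hand = request.results?.first else { return false }

    // Convert a Vision point into the plain helper type used by isPinching.
    func tip(_ p: VNRecognizedPoint) -> Fingertip {
        Fingertip(x: Double(p.location.x), y: Double(p.location.y),
                  confidence: Double(p.confidence))
    }
    let thumb = try hand.recognizedPoint(.thumbTip)
    let index = try hand.recognizedPoint(.indexTip)
    return isPinching(thumb: tip(thumb), index: tip(index))
}
#endif
```

Checking the confidence of each point before acting on it is one way to account for the accuracy considerations listed above, since low-confidence points are more likely near screen edges or when the hand is partly hidden.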

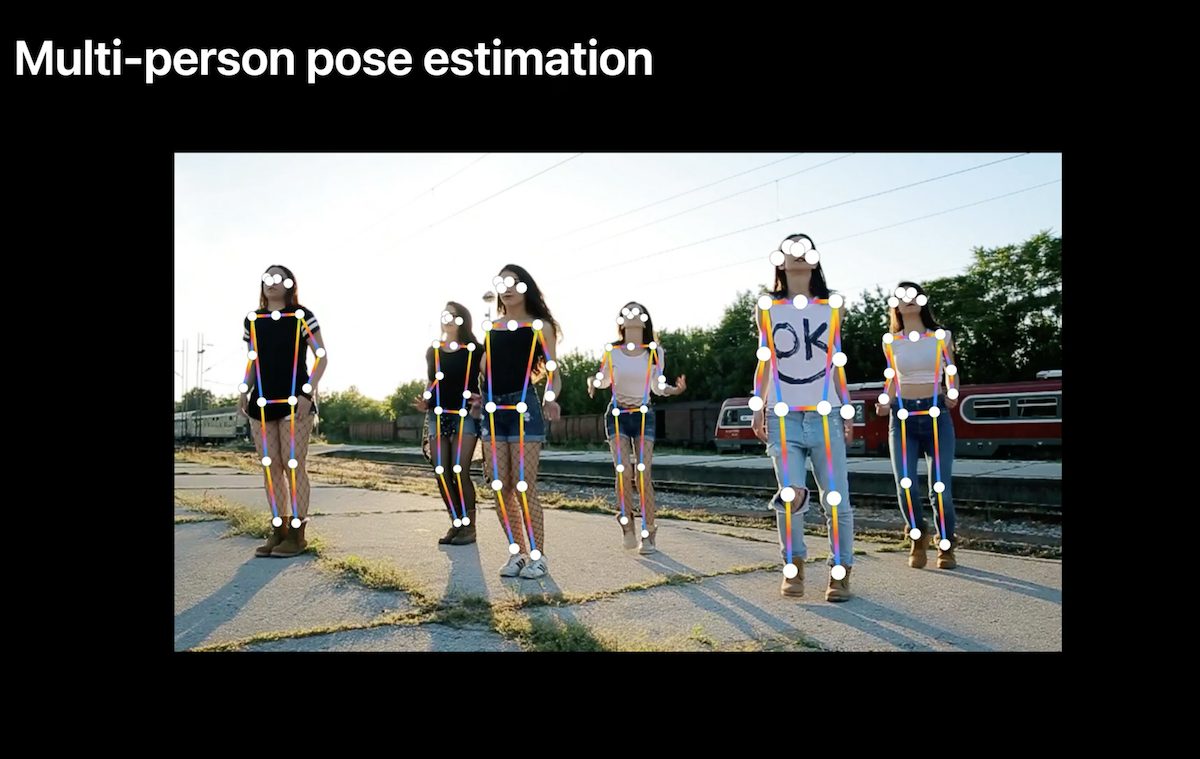

Body Pose

Using the same framework as hand pose detection, Vision can now estimate the body poses of multiple people in a scene. The human body pose APIs can be used to build a variety of shot features and apps.
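As a rough illustration of multi-person detection, a body pose request returns one observation per detected person, and each observation exposes its joints with a confidence score. The sketch below is not from the session: the `Joint` type, the `reliableJoints` and `detectBodies` helpers, and the 0.3 confidence threshold are assumptions for illustration, and it assumes a recent SDK in which the request's `results` are typed as body pose observations.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// A detected joint with a normalized location (0...1) and a confidence score.
/// Hypothetical helper type, not part of Vision.
struct Joint {
    var name: String
    var x: Double
    var y: Double
    var confidence: Double
}

/// Keeps only joints at or above `minConfidence` (an illustrative threshold).
func reliableJoints(_ joints: [Joint], minConfidence: Double = 0.3) -> [Joint] {
    return joints.filter { $0.confidence >= minConfidence }
}

#if canImport(Vision)
/// Detects body poses in one image and returns the reliable joints per person.
@available(iOS 14.0, macOS 11.0, *)
func detectBodies(in image: CGImage) throws -> [[Joint]] {
    let request = VNDetectHumanBodyPoseRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    var people: [[Joint]] = []
    // Each observation corresponds to one detected person.
    for person in request.results ?? [] {
        let points = try person.recognizedPoints(.all)
        var joints: [Joint] = []
        for (name, point) in points {
            joints.append(Joint(name: name.rawValue.rawValue,
                                x: Double(point.location.x),
                                y: Double(point.location.y),
                                confidence: Double(point.confidence)))
        }
        people.append(reliableJoints(joints))
    }
    return people
}
#endif
```

Filtering by confidence is a design choice rather than a requirement: an app that draws a skeleton overlay may prefer to hide uncertain joints, while one that feeds poses to a classifier may want to keep them all.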

Because human body pose detection in Vision can also be used offline, Keating presented the following applications of the new API:

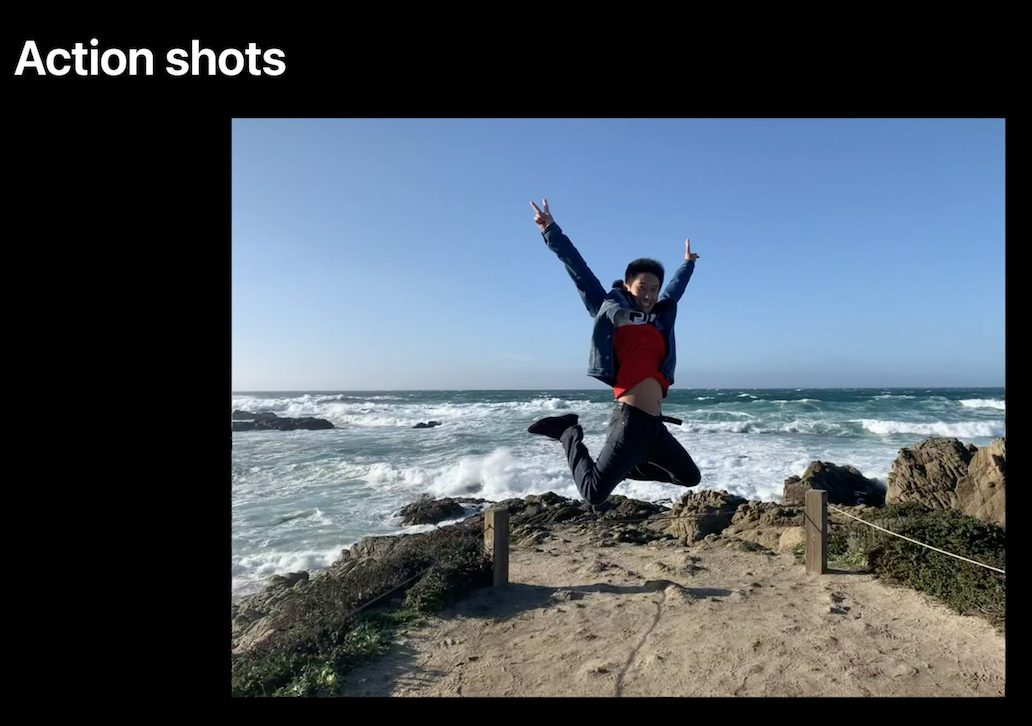

- Design better action shots that identify and save the most interesting part of an action as a photograph.

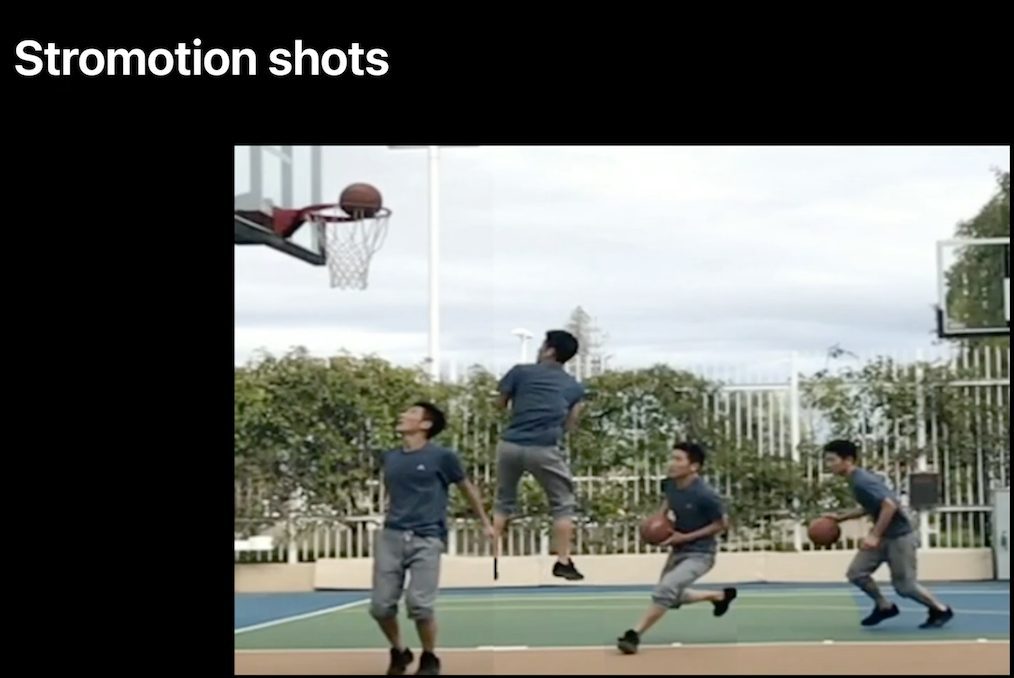

- Create stromotion shots from frames of an action that do not overlap.

- Build training and ergonomics apps.

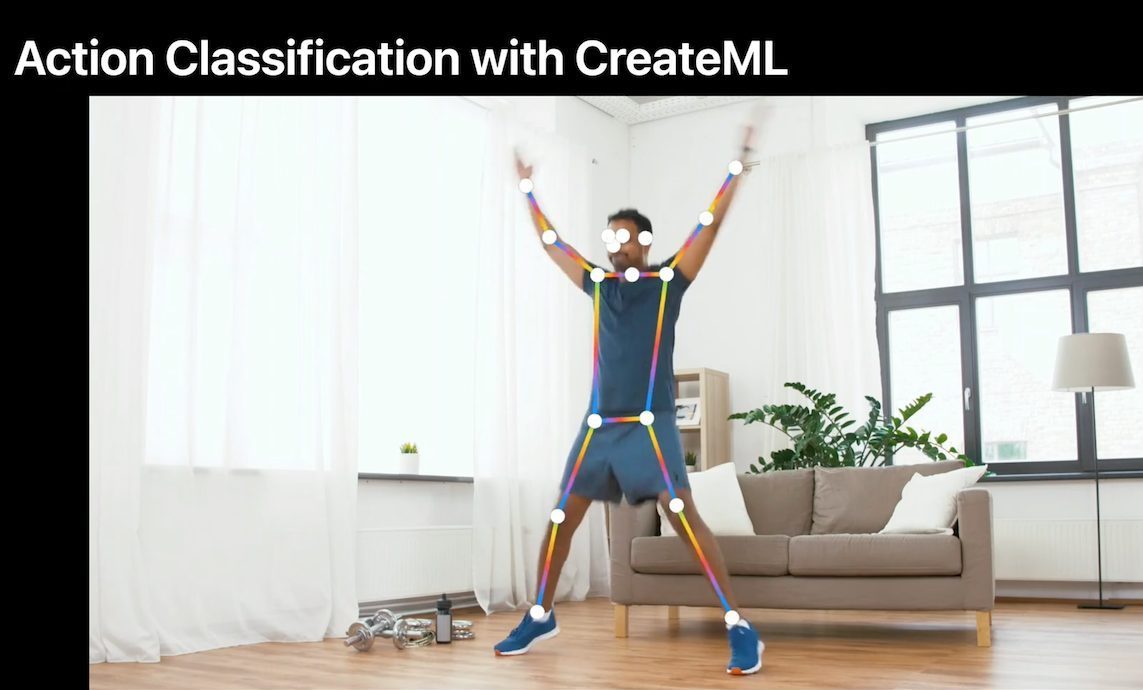

- Design fitness apps that use action classification through Create ML.

Keating noted that body pose estimation may be less accurate in the following situations:

- Flowing or robe-like clothing

- Bent over or upside down

- Near edges of screen

- People occluding each other

- The same tracking considerations as for hand pose

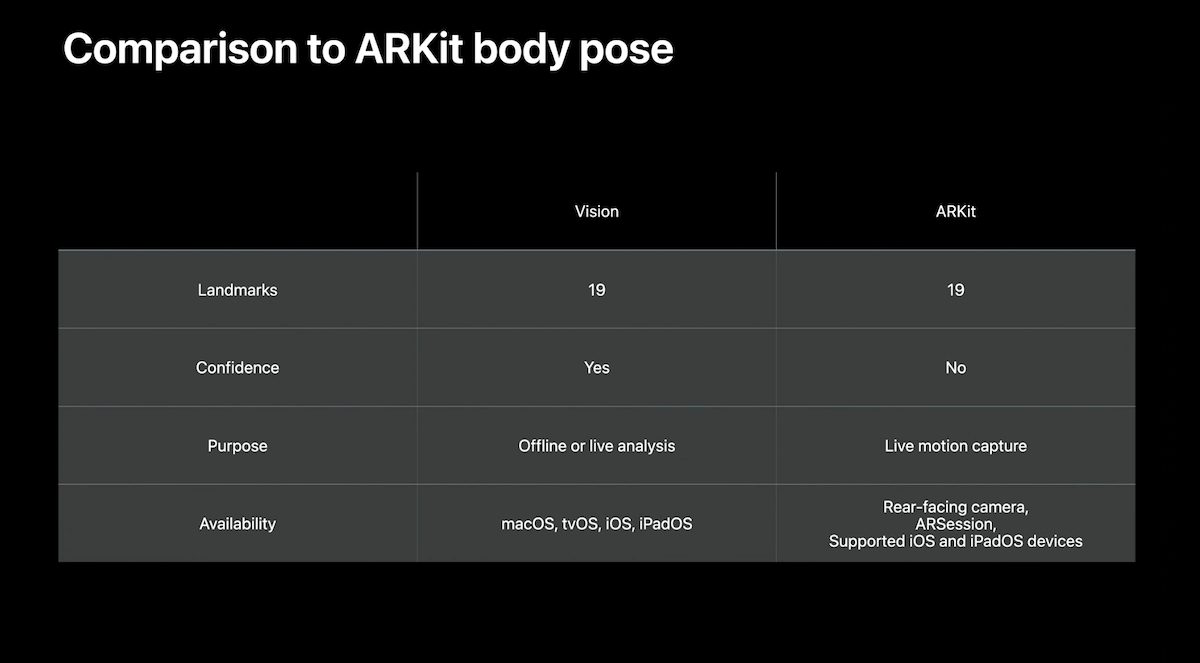

The video session concluded with a comparison of the Vision framework and ARKit that highlighted the former’s utility to developers.

Apple has improved the Vision framework considerably this year. Having started with face detection, detection of upside-down faces, face landmark detection, and human torso detection, the technology now offers features for use in still photos and camera feeds on iOS and iPadOS. Watch the whole video session here.
